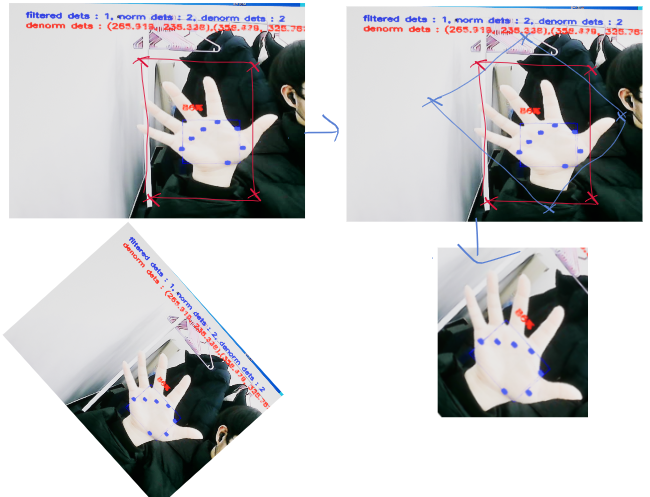

오탐 필터링하기

지난 글에 필터링 한다고 코드 추가했던거같지만

잘동작하지 않는데

좌표 값이 0에 가깝꺼니 0, 이미지보다 큰 경우가 발생

그래서 denormDets를 필터링하는 코드 추가

1. xmax, ymax가 10보다 작은경우 제거

2. xmin, ymin이 입력이미지(웹캠)보다 큰경우 제거

3. dets 영역이 너무 좁거나 50 x 40, 너무 큰경우 width0.7 x height0.7 제거

std::vector<Blaze::PalmDetection> Blaze::FilteringDets(std::vector<Blaze::PalmDetection> detections, int width, int height)

{

std::vector<Blaze::PalmDetection> filteredDets;

for (auto& denormDet : detections)

{

cv::Point2d startPt = cv::Point2d(denormDet.xmin, denormDet.ymin);

if (startPt.x < 10 || startPt.y < 10)

continue;

if (startPt.x > width || startPt.y > height)

continue;

int w = denormDet.xmax - denormDet.xmin;

int y = denormDet.ymax - denormDet.ymin;

if ((w * y < 50 * 40 )|| (w * y > (width * 0.7) * (height * 0.7)))

continue;

filteredDets.push_back(denormDet);

}

return filteredDets;

}

검출 성능이 아쉽긴하지만 위 조건은 잘 걸러내는편

검출결과 변환하기

이번에 할일은 검출결과를 변환

-> xy중심점, 스케일, 세타(방향각)을 반환

def detection2roi(self, detection):

""" Convert detections from detector to an oriented bounding box.

Adapted from:

# mediapipe/modules/face_landmark/face_detection_front_detection_to_roi.pbtxt

The center and size of the box is calculated from the center

of the detected box. Rotation is calcualted from the vector

between kp1 and kp2 relative to theta0. The box is scaled

and shifted by dscale and dy.

"""

if self.detection2roi_method == 'box':

# compute box center and scale

# use mediapipe/calculators/util/detections_to_rects_calculator.cc

xc = (detection[:,1] + detection[:,3]) / 2

yc = (detection[:,0] + detection[:,2]) / 2

scale = (detection[:,3] - detection[:,1]) # assumes square boxes

elif self.detection2roi_method == 'alignment':

# compute box center and scale

# use mediapipe/calculators/util/alignment_points_to_rects_calculator.cc

xc = detection[:,4+2*self.kp1]

yc = detection[:,4+2*self.kp1+1]

x1 = detection[:,4+2*self.kp2]

y1 = detection[:,4+2*self.kp2+1]

scale = ((xc-x1)**2 + (yc-y1)**2).sqrt() * 2

else:

raise NotImplementedError(

"detection2roi_method [%s] not supported"%self.detection2roi_method)

yc += self.dy * scale

scale *= self.dscale

# compute box rotation

x0 = detection[:,4+2*self.kp1]

y0 = detection[:,4+2*self.kp1+1]

x1 = detection[:,4+2*self.kp2]

y1 = detection[:,4+2*self.kp2+1]

#theta = np.arctan2(y0-y1, x0-x1) - self.theta0

theta = torch.atan2(y0-y1, x0-x1) - self.theta0

return xc, yc, scale, theta

블라즈해더에 필요한 변수, 함수선언

// Fill out your copyright notice in the Description page of Project Settings.

#pragma once

#define _USE_MATH_DEFINES

#include <math.h>

#include "PreOpenCVHeaders.h"

#include <opencv2/opencv.hpp>

#include "PostOpenCVHeaders.h"

#include "CoreMinimal.h"

/**

*

*/

class HANDDESKTOP_API Blaze

{

public:

Blaze();

~Blaze();

cv::dnn::Net blazePalm;

cv::dnn::Net blazeHand;

//for resize and pad

void ResizeAndPad(

cv::Mat& srcimg, cv::Mat& img256,

cv::Mat& img128, float& scale, cv::Scalar& pad

);

// var and funcs for blazepalm

struct PalmDetection {

float ymin;

float xmin;

float ymax;

float xmax;

cv::Point2d kp_arr[7];

float score;

};

int blazePalmSize = 128;

float palmMinScoreThresh = 0.4;

float palmMinNMSThresh = 0.2;

int palmMinNumKeyPoints = 7;

std::vector<PalmDetection> PredictPalmDetections(cv::Mat& img);

PalmDetection GetPalmDetection(cv::Mat regressor, cv::Mat classificator,

int stride, int anchor_count, int column, int row, int anchor, int offset);

float sigmoid(float x);

std::vector<PalmDetection> DenormalizePalmDetections(std::vector<PalmDetection> detections, int width, int height, cv::Scalar pad);

void DrawPalmDetections(cv::Mat& img, std::vector<Blaze::PalmDetection> denormDets);

std::vector<PalmDetection> FilteringDets(std::vector<PalmDetection> detections, int width, int height);

//var and func for blazehand

void Detections2ROI(std::vector<Blaze::PalmDetection> dets,

std::vector<float>& vec_xc, std::vector<float>& vec_yc,

std::vector<float>& vec_scale, std::vector<float>& vec_theta

);

float blazePalmDy = -0.5;

float blazePalmDScale = 2.6;

float blazePalmTheta0 = M_PI / 2;

};

센터점, 스케일, 방향 계산코드 작성

void Blaze::Detections2ROI(std::vector<Blaze::PalmDetection> dets, std::vector<float>& vec_xc, std::vector<float>& vec_yc, std::vector<float>& vec_scale, std::vector<float>& vec_theta)

{

for (int i = 0; i < dets.size(); i++)

{

Blaze::PalmDetection det = dets.at(i);

float xc = (det.xmax + det.xmin) / 2;

float yc = (det.ymax + det.ymin) / 2;

float scale = (det.xmax - det.xmin); //assumes square box

yc = blazePalmDy * scale;

scale *= blazePalmDScale;

//compute box rot

float theta = std::atan2(det.kp_arr[0].y - det.kp_arr[1].y, det.kp_arr[0].x - det.kp_arr[1].x) - blazePalmTheta0;

vec_xc.push_back(xc);

vec_yc.push_back(yc);

vec_scale.push_back(scale);

vec_theta.push_back(theta);

}

}

det2roi로 중심점, 스케일, 세타들가지고 입력텐서, 역어파인변환한 이미지, 박스를 가져옴

xc, yc, scale, theta = palm_detector.detection2roi(palm_detections.cpu())

img, affine2, box2 = hand_regressor.extract_roi(frame, xc, yc, theta, scale)

def extract_roi(self, frame, xc, yc, theta, scale):

# take points on unit square and transform them according to the roi

points = torch.tensor([[-1, -1, 1, 1],

[-1, 1, -1, 1]], device=scale.device).view(1,2,4)

points = points * scale.view(-1,1,1)/2

theta = theta.view(-1, 1, 1)

R = torch.cat((

torch.cat((torch.cos(theta), -torch.sin(theta)), 2),

torch.cat((torch.sin(theta), torch.cos(theta)), 2),

), 1)

center = torch.cat((xc.view(-1,1,1), yc.view(-1,1,1)), 1)

points = R @ points + center

# use the points to compute the affine transform that maps

# these points back to the output square

res = self.resolution

points1 = np.array([[0, 0, res-1],

[0, res-1, 0]], dtype=np.float32).T

affines = []

imgs = []

for i in range(points.shape[0]):

pts = points[i, :, :3].cpu().numpy().T

M = cv2.getAffineTransform(pts, points1)

img = cv2.warpAffine(frame, M, (res,res))#, borderValue=127.5)

img = torch.tensor(img, device=scale.device)

imgs.append(img)

affine = cv2.invertAffineTransform(M).astype('float32')

affine = torch.tensor(affine, device=scale.device)

affines.append(affine)

if imgs:

imgs = torch.stack(imgs).permute(0,3,1,2).float() / 255.#/ 127.5 - 1.0

affines = torch.stack(affines)

else:

imgs = torch.zeros((0, 3, res, res), device=scale.device)

affines = torch.zeros((0, 2, 3), device=scale.device)

return imgs, affines, points

대충 이런식으로 한다는 코드인것같긴한데

여러 이미지에 대한 점들을 한번에 처리하는 파이썬 코드를

다중 처리는 힘드니 개별 처리하도록 c++로 구현해야함

보다보니 정리된게

img는 역 어파인변환된 이미지, 블라즈 핸드 추론용

affine은 역어파인 행렬, 추론결과(정방향 좌표들) 반정규화하는데(정방향을 실제이미지방향으로) 사용

box는 손감싸는 박스 좌표

img, affine2, box2 = hand_regressor.extract_roi(frame, xc, yc, theta, scale)

if len(img) != 0:

print(img.shape)

# 파이토치 텐서를 넘파이 배열로 변환

array = img.squeeze().numpy()

array = np.transpose(array, (1, 2, 0))

print(array.shape)

print()

# 넘파이 배열을 이미지로 변환

image = cv2.cvtColor(array, cv2.COLOR_RGB2BGR)

# 이미지를 표시

cv2.imshow("Image", image)

flags2, handed2, normalized_landmarks2 = hand_regressor(img.to(gpu))

landmarks2 = hand_regressor.denormalize_landmarks(normalized_landmarks2.cpu(), affine2)

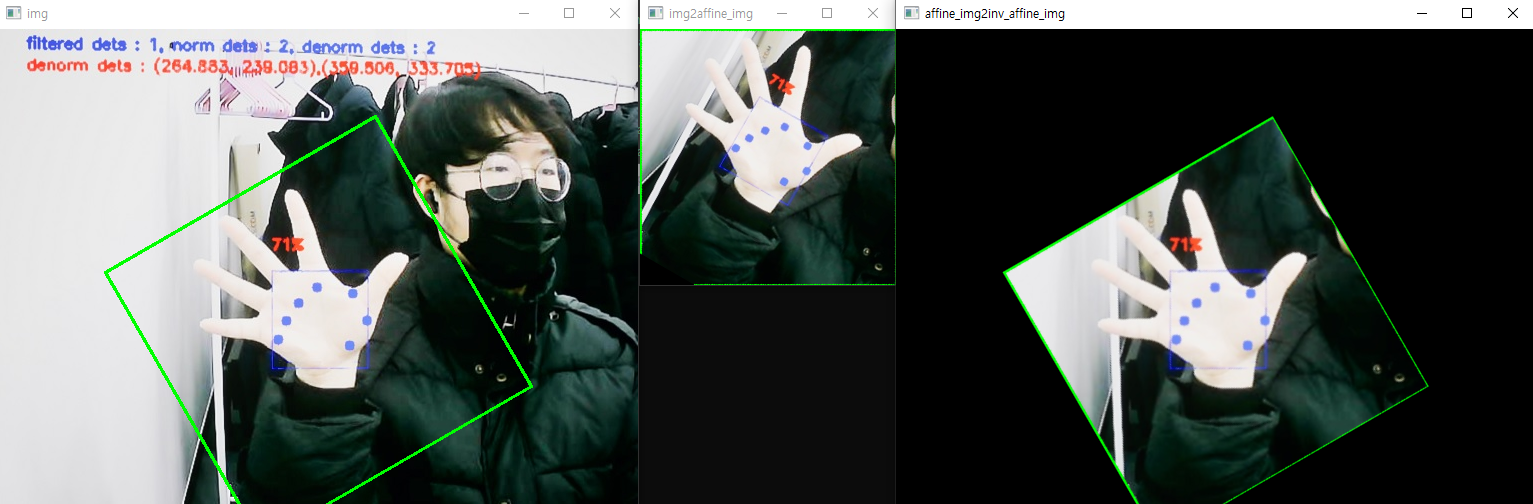

어파인변환이미지, 역어파인변환행렬, 박스좌표(기울어진)를 구하기 위해 별도 테스트 코드 작성

1. 원본 이미지

2. 어파인 변환 이미지

3. 어파인 변환이미지 -> 역어파인변환결과

#include <opencv2/opencv.hpp>

int main() {

cv::Mat img = cv::imread("testimg.jpg");

float theta = -30;

cv::Rect box(260, 240, 120, 120);

float scale = 2.6;

// 회전 중심점 계산

cv::Point2f center(box.x + box.width / 2, box.y + box.height / 2);

// 회전 변환 행렬 계산

cv::Mat rotationMatrix = cv::getRotationMatrix2D(center, theta, 1.0);

// 원래 detection 꼭지점

cv::Point2f originaDetPoints[4] = {

cv::Point2f(box.x, box.y),

cv::Point2f(box.x + box.width, box.y),

cv::Point2f(box.x + box.width, box.y + box.height),

cv::Point2f(box.x, box.y + box.height)

};

// 회전된 상자의 꼭지점 계산

cv::Point2f startRotatedPoints[4];

// 목표 꼭지점(블라즈 핸드는 256이므로

cv::Point2f targetRotatedPoint[4] = {

cv::Point2f(0, 0),

cv::Point2f(256, 0),

cv::Point2f(256, 256),

cv::Point2f(0, 256)

};

for (int i = 0; i < 4; i++)

{

cv::Point2f startRotatedPoint = originaDetPoints[i] - center;

float x = startRotatedPoint.x * std::cos(theta * CV_PI / 180) - startRotatedPoint.y * std::sin(theta * CV_PI / 180);

float y = startRotatedPoint.x * std::sin(theta * CV_PI / 180) + startRotatedPoint.y * std::cos(theta * CV_PI / 180);

x = x * scale;

y = y * scale;

startRotatedPoints[i] = cv::Point2f(x, y) + center;

}

// 회전된 상자 그리기

for (int i = 0; i < 4; i++) {

cv::line(img, startRotatedPoints[i], startRotatedPoints[(i + 1) % 4], cv::Scalar(0, 255, 0), 2);

}

// 어파인 변환 행렬 계산

cv::Mat affineTransform_Mat = cv::getAffineTransform(startRotatedPoints, targetRotatedPoint);

cv::Mat inv_affine_tranform_Mat;

cv::invertAffineTransform(affineTransform_Mat, inv_affine_tranform_Mat);

// 역/정 어파인변환 행렬로 이미지 변환

cv::Mat affine_img;

cv::Mat inv_affine_img;

cv::warpAffine(img, affine_img, affineTransform_Mat, cv::Size(256, 256));

cv::warpAffine(affine_img, inv_affine_img, inv_affine_tranform_Mat, img.size());

// 결과 이미지 출력

cv::imshow("img", img);

cv::imshow("img2affine_img", affine_img);

cv::imshow("affine_img2inv_affine_img", inv_affine_img);

cv::waitKey(0);

cv::destroyAllWindows();

return 0;

}

이 코드를 언리엘에 적합하게 수정

블라즈 헤더에 핸드를 위한 변수, 함수 다시 정리

//var and func for blazehand

void Detections2ROI(std::vector<Blaze::PalmDetection> dets,

std::vector<float>& vec_xc, std::vector<float>& vec_yc,

std::vector<float>& vec_scale, std::vector<float>& vec_theta

);

float blazePalmDy = -0.5;

float blazePalmDScale = 2.6;

float blazePalmTheta0 = CV_PI / 2;

void extract_roi(cv::Mat frame, std::vector<float>& vec_xc, std::vector<float>& vec_yc,

std::vector<float>& vec_scale, std::vector<float>& vec_theta, std::vector<PalmDetection> denormDets,

std::vector<cv::Mat>& imgs, std::vector<cv::Mat>& affines, std::vector<BoxROI>& boxROIs);

int blazeHandSize = 256;

};

디텍션즈투ROI에서 scale이 잘 맞지 않아 디폴트스케일값으로 설정

센터, 스케일, 세타 벡터에 담기

void Blaze::Detections2ROI(std::vector<Blaze::PalmDetection> dets, std::vector<float>& vec_xc, std::vector<float>& vec_yc, std::vector<float>& vec_scale, std::vector<float>& vec_theta)

{

for (int i = 0; i < dets.size(); i++)

{

Blaze::PalmDetection det = dets.at(i);

float xc = (det.xmax + det.xmin) / 2;

float yc = (det.ymax + det.ymin) / 2;

float scale = blazePalmDScale;

/*

float scale = (det.xmax - det.xmin); //assumes square box

yc = blazePalmDy * scale;

scale *= blazePalmDScale;

*/

//compute box rot

float theta = std::atan2(det.kp_arr[0].y - det.kp_arr[1].y, det.kp_arr[0].x - det.kp_arr[1].x) - blazePalmTheta0;

vec_xc.push_back(xc);

vec_yc.push_back(yc);

vec_scale.push_back(scale);

vec_theta.push_back(theta);

}

}

기존 센터 좌표, 세타, 스케일 가져오기

센터 점과 세타로 회전행렬 취득

원래 검출 점들 준비(작은 사각형)

roi 점들에 원래 검출점 잠깐담고

void Blaze::extract_roi(cv::Mat frame, std::vector<float>& vec_xc, std::vector<float>& vec_yc,

std::vector<float>& vec_scale, std::vector<float>& vec_theta, std::vector<Blaze::PalmDetection> denormDets,

std::vector<cv::Mat>& vec_img, std::vector<cv::Mat>& vec_affine, std::vector<BoxROI>& vec_boxROI)

{

cv::Mat img = frame.clone();

for (int i = 0; i < vec_xc.size(); i++)

{

cv::Point2f center = cv::Point2f(vec_xc.at(i), vec_yc.at(i));

Blaze::PalmDetection denormDet = denormDets.at(i);

float theta = vec_theta.at(i);

theta = theta * 180 / CV_PI;

float scale = vec_scale.at(i);

// 회전 변환 행렬 계산

cv::Mat rotationMatrix = cv::getRotationMatrix2D(center, theta, 1.0);

// 원래 detection 꼭지점

cv::Point2f originaDetPoints[4] = {

cv::Point2f(denormDet.xmin, denormDet.ymin),

cv::Point2f(denormDet.xmax, denormDet.ymin),

cv::Point2f(denormDet.xmax, denormDet.ymax),

cv::Point2f(denormDet.xmin, denormDet.ymax)

};

// 회전된 상자의 꼭지점 계산

cv::Point2f startRotatedPoints[4];

// 목표 꼭지점(블라즈 핸드는 256이므로

cv::Point2f targetRotatedPoint[4] = {

cv::Point2f(0, 0),

cv::Point2f(256, 0),

cv::Point2f(256, 256),

cv::Point2f(0, 256)

};

Blaze::BoxROI roi;

for (int j = 0; j < 4; j++)

{

cv::Point2f startRotatedPoint = originaDetPoints[j] - center;

float x = startRotatedPoint.x * std::cos(theta * CV_PI / 180) - startRotatedPoint.y * std::sin(theta * CV_PI / 180);

float y = startRotatedPoint.x * std::sin(theta * CV_PI / 180) + startRotatedPoint.y * std::cos(theta * CV_PI / 180);

x = x * scale;

y = y * scale;

startRotatedPoints[j] = cv::Point2f(x, y) + center;

roi.pts[j] = startRotatedPoints[j];

}

// 어파인 변환 행렬 계산

cv::Mat affineTransform_Mat = cv::getAffineTransform(startRotatedPoints, targetRotatedPoint);

cv::Mat inv_affine_tranform_Mat;

cv::invertAffineTransform(affineTransform_Mat, inv_affine_tranform_Mat);

// 정 어파인변환 행렬로 이미지 변환

cv::Mat affine_img;

cv::warpAffine(img, affine_img, affineTransform_Mat, cv::Size(blazeHandSize, blazeHandSize));

/*

std::ostringstream oss_info;

oss_info << "center(" << center.x << "," << center.y << "), scale : " << scale;

std::string oss_info_str = oss_info.str();

cv::putText(frame, oss_info_str, cv::Point(30, 370), cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(255, 0, 255), 2);

std::ostringstream oss_originaDetPoints;

oss_originaDetPoints << "originaDetPoints pt0(" << originaDetPoints[0].x << "," << originaDetPoints[0].y << ")"

<< ", pt1(" << originaDetPoints[1].x << ", " << originaDetPoints[1].y << ")";

std::string originaDetPoints_str = oss_originaDetPoints.str();

cv::putText(frame, originaDetPoints_str, cv::Point(30, 400), cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(255, 0, 255), 2);

std::ostringstream oss_startRotatedPoints;

oss_startRotatedPoints << "startRotatedPoint pt0(" << startRotatedPoints[0].x << "," << startRotatedPoints[0].y<<")"

<< ", pt1(" << startRotatedPoints[1].x << ", " << startRotatedPoints[1].y << ")";

std::string startRotatedPoints_str = oss_startRotatedPoints.str();

cv::putText(frame, startRotatedPoints_str, cv::Point(30, 420), cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(255, 0, 255), 2);

*/

vec_img.push_back(affine_img);

vec_affine.push_back(inv_affine_tranform_Mat);

vec_boxROI.push_back(roi);

}

}

리드프레임엔 웹캠 이미지에 어파인 변환 손영상을 복붙하도록 수정

void ADesktopGameModeBase::ReadFrame()

{

if (!capture.isOpened())

{

return;

}

capture.read(webcamImage);

/*

get filtered detections

*/

blaze.ResizeAndPad(webcamImage, img256, img128, scale, pad);

//UE_LOG(LogTemp, Log, TEXT("scale value: %f, pad value: (%f, %f)"), scale, pad[0], pad[1]);

std::vector<Blaze::PalmDetection> normDets = blaze.PredictPalmDetections(img128);

std::vector<Blaze::PalmDetection> denormDets = blaze.DenormalizePalmDetections(normDets, webcamWidth, webcamHeight, pad);

std::vector<Blaze::PalmDetection> filteredDets = blaze.FilteringDets(denormDets, webcamWidth, webcamHeight);

/*

get affined hand img, affine mat, ROI

*/

std::vector<float> vec_xc;

std::vector<float> vec_yc;

std::vector<float> vec_scale;

std::vector<float> vec_theta;

blaze.Detections2ROI(filteredDets, vec_xc, vec_yc, vec_scale, vec_theta);

std::vector<cv::Mat> vec_img;

std::vector<cv::Mat> vec_affine;

std::vector<Blaze::BoxROI> vec_boxROI;

blaze.extract_roi(webcamImage, vec_xc, vec_yc,

vec_scale, vec_theta, filteredDets,

vec_img, vec_affine, vec_boxROI);

/*

Draw

*/

blaze.DrawPalmDetections(webcamImage, filteredDets);

std::string dets_size_str = "filtered dets : " + std::to_string(filteredDets.size()) + ", norm dets : " + std::to_string(normDets.size()) + ", denorm dets : " + std::to_string(denormDets.size());

cv::putText(webcamImage, dets_size_str, cv::Point(30, 30), cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(255, 0, 0), 2);

for (int i = 0; i < filteredDets.size(); i++)

{

auto& det = filteredDets.at(i);

std::ostringstream oss;

oss << "denorm dets : (" << det.xmin << ", " << det.ymin << "),(" <<

det.xmax << ", " << det.ymax << "), center : " << vec_xc.at(i) <<","<<vec_yc.at(i);

std::string det_str = oss.str();

cv::putText(webcamImage, det_str, cv::Point(30, 50 + 20 * i), cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 255), 2);

}

std::ostringstream oss_vec;

oss_vec << "vec_xc : " << vec_xc.size() << ", vec_scale : " << vec_scale.size() << ", vec_theta" << vec_theta.size();

std::string oss_vec_str = oss_vec.str();

cv::putText(webcamImage, oss_vec_str, cv::Point(30, 450), cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 255), 2);

std::ostringstream oss_mat;

oss_mat << "vec_img : " << vec_img.size() << ", vec_affine : " << vec_affine.size() << ", vec_boxROI" << vec_boxROI.size();

std::string oss_mat_str = oss_mat.str();

cv::putText(webcamImage, oss_mat_str, cv::Point(30, 465), cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 255), 2);

for (int i = 0; i < vec_img.size(); i++)

{

cv::Mat tmp = vec_img.at(i);

cv::resize(tmp, tmp, cv::Size(100, 100));

cv::Mat roi = webcamImage(cv::Rect(400 + (i * 100), 300, 100, 100));

tmp.copyTo(roi);

}

/*

blaze.DrawPalmDetections(img128, normDets);

cv::Mat roi1 = webcamImage(cv::Rect(1000, 500, img128.cols, img128.rows));

img128.copyTo(roi1);

*/

MatToTexture2D(webcamImage);

/*

모니터 시각화

*/

ScreensToCVMats();

CVMatsToTextures();

}

원래 extract roi 한 이후에는 추론하고 시각화가지만

먼저 roi 드로잉부터 구현해보면

xc, yc, scale, theta = palm_detector.detection2roi(palm_detections.cpu())

img, affine2, box2 = hand_regressor.extract_roi(frame, xc, yc, theta, scale)

if len(img) != 0:

print(img.shape)

# 파이토치 텐서를 넘파이 배열로 변환

array = img.squeeze().numpy()

array = np.transpose(array, (1, 2, 0))

print(array.shape)

print()

# 넘파이 배열을 이미지로 변환

image = cv2.cvtColor(array, cv2.COLOR_RGB2BGR)

# 이미지를 표시

cv2.imshow("Image", image)

flags2, handed2, normalized_landmarks2 = hand_regressor(img.to(gpu))

landmarks2 = hand_regressor.denormalize_landmarks(normalized_landmarks2.cpu(), affine2)

for i in range(len(flags)):

landmark, flag = landmarks[i], flags[i]

if flag>.5:

draw_landmarks(frame, landmark[:,:2], FACE_CONNECTIONS, size=1)

for i in range(len(flags2)):

landmark, flag = landmarks2[i], flags2[i]

if flag>.5:

draw_landmarks(frame, landmark[:,:2], HAND_CONNECTIONS, size=2)

draw_roi(frame, box)

draw_roi(frame, box2)

draw_detections(frame, face_detections)

draw_detections(frame, palm_detections)

블라즈 헤더에 드로 roi 함수 추가하고

void extract_roi(cv::Mat frame, std::vector<float>& vec_xc, std::vector<float>& vec_yc,

std::vector<float>& vec_scale, std::vector<float>& vec_theta, std::vector<PalmDetection> denormDets,

std::vector<cv::Mat>& imgs, std::vector<cv::Mat>& affines, std::vector<BoxROI>& boxROIs);

int blazeHandSize = 256;

void DrawROI(cv::Mat& frame, std::vector<BoxROI> vec_box);

};

드로 roi 구현하고

void Blaze::DrawROI(cv::Mat& frame, std::vector<Blaze::BoxROI> vec_box)

{

for (auto& roi : vec_box)

{

cv::line(frame, roi.pts[0], roi.pts[1], cv::Scalar(125, 0, 0), 2);

cv::line(frame, roi.pts[1], roi.pts[2], cv::Scalar(125, 0, 0), 2);

cv::line(frame, roi.pts[2], roi.pts[3], cv::Scalar(125, 0, 0), 2);

cv::line(frame, roi.pts[3], roi.pts[0], cv::Scalar(125, 0, 0), 2);

}

}

데스크탑게임모드베이스 맨 끝에 드로ROI 추가

for (int i = 0; i < vec_img.size(); i++)

{

cv::Mat tmp = vec_img.at(i);

cv::resize(tmp, tmp, cv::Size(100, 100));

cv::Mat roi = webcamImage(cv::Rect(500 + (i * 100), 350, 100, 100));

tmp.copyTo(roi);

}

blaze.DrawROI(webcamImage, vec_boxROI);

MatToTexture2D(webcamImage);

/*

모니터 시각화

*/

ScreensToCVMats();

CVMatsToTextures();

}

손을 놓칠때가 많긴 하지만 일단 ROI 좌표도 잘 가져와서 드로잉 됨

손가락이 약간 잘리니 디폴트 스케일 값을 이렇게 수정

float blazePalmDScale = 3;

'컴퓨터과학 > 언리얼' 카테고리의 다른 글

| HandDesktop09 - 기존 문제들 원인 찾음, blaze 갈아엎기, 블라즈팜 문제해결 (0) | 2024.01.26 |

|---|---|

| HandDesktop08 - blaze hand 사용하기 (0) | 2024.01.26 |

| HandDesktop06 - blaze palm 사용하기 (0) | 2024.01.25 |

| HandDesktop05 - 손 추정을 위한 blaze 추론 코드 살펴보기 (0) | 2024.01.24 |

| HandDesktop04 - 다중 모니터 구현하기 (0) | 2024.01.24 |