In the previous post I worked out in Python how to denormalize the left-hand landmark data, so now I'm going to implement it in C++.

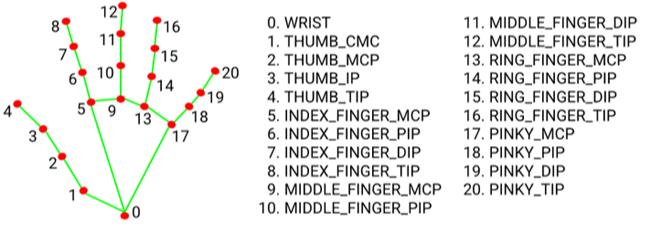

Before that, though, I first need to determine whether a hand found by BlazeHand is a left hand or a right hand.

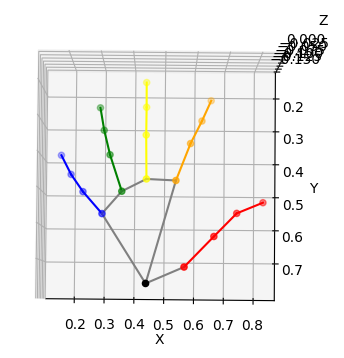

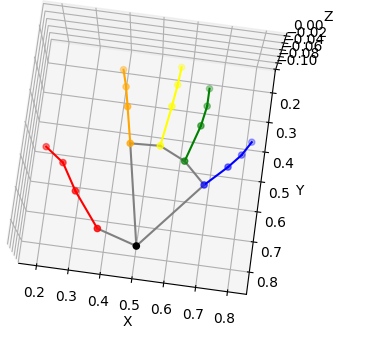

The denormalization and visualization code from the last post:

```python
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd

%matplotlib widget

# normalized_landmark: (21, 3) array of normalized BlazeHand landmarks from the previous post

# 3D scatter plot
fig = plt.figure()
ax = fig.add_subplot(111, projection='3d')

denormalized_landmark = normalized_landmark.copy()
denormalized_landmark[:, 0] = 16.5 * (normalized_landmark[:, 1] - normalized_landmark[0, 1]) * -1
denormalized_landmark[:, 1] = (normalized_landmark[:, 0] - 0.5) * 11.6 * -1
denormalized_landmark[:, 2] = normalized_landmark[:, 2] * 11.6 * -1

x = denormalized_landmark[:, 0]
y = denormalized_landmark[:, 1]
z = denormalized_landmark[:, 2]

# one color per landmark: wrist black, then 4 points per finger
colors = ['black'] + ['red'] * 4 + ['orange'] * 4 + ['yellow'] * 4 + ['green'] * 4 + ['blue'] * 4
ax.scatter(x, y, z, c=colors)

# fingers
colors = ['red', 'orange', 'yellow', 'green', 'blue']
groups = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16], [17, 18, 19, 20]]
for i, group in enumerate(groups):
    for j in range(len(group) - 1):
        ax.plot([x[group[j]], x[group[j+1]]], [y[group[j]], y[group[j+1]]], [z[group[j]], z[group[j+1]]], color=colors[i])

# back of the hand
lines = [[0, 1], [0, 5], [0, 17], [5, 9], [9, 13], [13, 17]]
for line in lines:
    ax.plot([x[line[0]], x[line[1]]], [y[line[0]], y[line[1]]], [z[line[0]], z[line[1]]], color='gray')

ax.set_xlabel('X')
ax.set_ylabel('Y')
ax.set_zlabel('Z')
ax.set_xlim(-1, 17)
ax.set_ylim(7, -7)
ax.set_zlim(0, 2)
plt.show()
```
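Written out as formulas, the three NumPy assignments above map each normalized landmark $(x_j, y_j, z_j)$, $j = 0, \dots, 20$, to skeleton coordinates as follows; 16.5 and 11.6 are the same constants that reappear below as `skeletonXRatio` and `skeletonYRatio`.

$$
\begin{aligned}
x'_j &= -16.5\,(y_j - y_0) \\
y'_j &= -11.6\,(x_j - 0.5) \\
z'_j &= -11.6\,z_j
\end{aligned}
$$

Because $x'_j$ is measured relative to $y_0$, the wrist (landmark 0) always lands on $x' = 0$.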

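The denormalization code I had already written in Blaze multiplies by the width/height of the box used for inference and then adds the box's x/y to put the points back in place: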

```cpp
std::vector<cv::Mat> Blaze::DenormalizeHandLandmarks(std::vector<cv::Mat> imgs_landmarks, std::vector<cv::Rect> rects)
{
    std::vector<cv::Mat> denorm_imgs_landmarks;

    for (int i = 0; i < imgs_landmarks.size(); i++)
    {
        cv::Mat landmarks = imgs_landmarks.at(i);
        cv::Rect rect = rects.at(i);
        cv::Mat squeezed = landmarks.reshape(0, landmarks.size[1]);
        //std::cout << "squeezed size: " << squeezed.size[0] << "," << squeezed.size[1] << std::endl;

        for (int j = 0; j < squeezed.size[0]; j++)
        {
            squeezed.at<float>(j, 0) = squeezed.at<float>(j, 0) * rect.width + rect.x;
            squeezed.at<float>(j, 1) = squeezed.at<float>(j, 1) * rect.height + rect.y;
            squeezed.at<float>(j, 2) = squeezed.at<float>(j, 2) * rect.height * -1;
        }
        denorm_imgs_landmarks.push_back(squeezed);
    }
    //std::cout << std::endl;
    return denorm_imgs_landmarks;
}
```

Then I added this to the Blaze header:

```cpp
    // var, funs for left, right lms and bone location
    float skeletonXRatio = 16.5;
    float skeletonYRatio = 11.6;
    cv::Mat handLeft;
    cv::Mat handRight;
    void DenormalizeHandLandmarksForBoneLocation(std::vector<cv::Mat> imgs_landmarks);
};
```

In the constructor, both are initialized as 21 rows × 3 columns:

```cpp
Blaze::Blaze()
{
    this->blazePalm = cv::dnn::readNet("c:/blazepalm_old.onnx");
    this->blazeHand = cv::dnn::readNet("c:/blazehand.onnx");

    handLeft = cv::Mat(21, 3, CV_32FC1);
    handRight = cv::Mat(21, 3, CV_32FC1);
}
```

The implementation looks like this; partway through, it also has to decide whether this is a left hand or a right hand.

```cpp
void Blaze::DenormalizeHandLandmarksForBoneLocation(std::vector<cv::Mat> imgs_landmarks)
{
    for (int i = 0; i < imgs_landmarks.size(); i++)
    {
        cv::Mat landmarks = imgs_landmarks.at(i);
        cv::Mat squeezed = landmarks.reshape(0, landmarks.size[1]);
        //std::cout << "squeezed size: " << squeezed.size[0] << "," << squeezed.size[1] << std::endl;

        for (int j = 0; j < squeezed.size[0]; j++)
        {
            squeezed.at<float>(j, 0) = (squeezed.at<float>(j, 1) - squeezed.at<float>(0, 1)) * skeletonXRatio;
            squeezed.at<float>(j, 1) = (squeezed.at<float>(j, 0) - 0.5) * skeletonYRatio * -1;
            squeezed.at<float>(j, 2) = squeezed.at<float>(j, 2) * skeletonYRatio * -1;
        }
    }
}
```

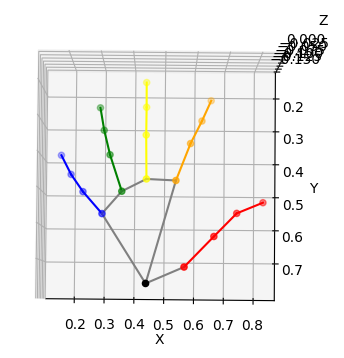

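Let's reason from the raw normalized data, with no adjustment at all. For a left hand, the x of the thumb tip (landmark 4) is greater than the x of the pinky tip (landmark 20), which can be checked by plotting the raw data: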

```python
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd

%matplotlib widget

# 3D scatter plot of the raw normalized landmarks
fig = plt.figure()
ax = fig.add_subplot(111, projection='3d')

x = normalized_landmark[:, 0]
y = normalized_landmark[:, 1]
z = normalized_landmark[:, 2]

# one color per landmark: wrist black, then 4 points per finger
colors = ['black'] + ['red'] * 4 + ['orange'] * 4 + ['yellow'] * 4 + ['green'] * 4 + ['blue'] * 4
ax.scatter(x, y, z, c=colors)

# fingers
colors = ['red', 'orange', 'yellow', 'green', 'blue']
groups = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16], [17, 18, 19, 20]]
for i, group in enumerate(groups):
    for j in range(len(group) - 1):
        ax.plot([x[group[j]], x[group[j+1]]], [y[group[j]], y[group[j+1]]], [z[group[j]], z[group[j+1]]], color=colors[i])

# back of the hand
lines = [[0, 1], [0, 5], [0, 17], [5, 9], [9, 13], [13, 17]]
for line in lines:
    ax.plot([x[line[0]], x[line[1]]], [y[line[0]], y[line[1]]], [z[line[0]], z[line[1]]], color='gray')

ax.set_xlabel('X')
ax.set_ylabel('Y')
ax.set_zlabel('Z')
plt.gca().invert_xaxis()
plt.show()
```

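An IsLeftHand function takes the normalized landmarks, and depending on its result they are stored in the left-hand Mat or the right-hand Mat.

In component space the right hand sits differently from the left, so strictly speaking it needs a slightly different denormalization, but let's skip that for now.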

```cpp
void Blaze::DenormalizeHandLandmarksForBoneLocation(std::vector<cv::Mat> imgs_landmarks)
{
    for (int i = 0; i < imgs_landmarks.size(); i++)
    {
        cv::Mat landmarks = imgs_landmarks.at(i);
        cv::Mat squeezed = landmarks.reshape(0, landmarks.size[1]);

        // decide handedness while the values are still normalized
        bool isLeft = IsLeftHand(squeezed);
        //std::cout << "squeezed size: " << squeezed.size[0] << "," << squeezed.size[1] << std::endl;

        for (int j = 0; j < squeezed.size[0]; j++)
        {
            squeezed.at<float>(j, 0) = (squeezed.at<float>(j, 1) - squeezed.at<float>(0, 1)) * skeletonXRatio;
            squeezed.at<float>(j, 1) = (squeezed.at<float>(j, 0) - 0.5) * skeletonYRatio * -1;
            squeezed.at<float>(j, 2) = squeezed.at<float>(j, 2) * skeletonYRatio * -1;
        }

        if (isLeft)
            squeezed.copyTo(handLeft);
        else
            squeezed.copyTo(handRight);
    }
}

// Left hand if the thumb tip (landmark 4) has a larger normalized x than the pinky tip (landmark 20)
bool Blaze::IsLeftHand(cv::Mat normalizedLandmarks)
{
    return normalizedLandmarks.at<float>(4, 0) > normalizedLandmarks.at<float>(20, 0);
}
```
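Comparing the x of landmarks 4 and 20 only works while the hand is roughly upright: if the hand is rotated about 180° in the image, the thumb ends up on the other side and the test flips. A sketch of one common alternative (not what this post uses) takes the z component of the 2D cross product of wrist → index MCP (landmark 5) and wrist → pinky MCP (landmark 17). Its sign does not change under in-plane rotation, although it still flips if the other side of the hand faces the camera. `Pt2` and `IndexSideOnPositiveX` are hypothetical names; for an upright hand the function agrees with `x[4] > x[20]`, so whichever handedness that comparison stands for carries over unchanged.

```cpp
#include <array>
#include <cassert>

// Hypothetical 2D point in normalized image coordinates (x to the right, y downward).
struct Pt2 { float x, y; };

// z component of (indexMcp - wrist) x (pinkyMcp - wrist).
// Negative means the index side (and therefore the thumb side) lies toward +x when the
// fingers point up; rotating the hand within the image plane keeps the sign.
bool IndexSideOnPositiveX(Pt2 wrist, Pt2 indexMcp, Pt2 pinkyMcp)
{
    const float ax = indexMcp.x - wrist.x;
    const float ay = indexMcp.y - wrist.y;
    const float bx = pinkyMcp.x - wrist.x;
    const float by = pinkyMcp.y - wrist.y;
    const float crossZ = ax * by - ay * bx;
    return crossZ < 0.0f;
}

// Convenience overload for the 21 BlazeHand landmarks (0 = wrist, 5 = index MCP, 17 = pinky MCP).
bool IndexSideOnPositiveX(const std::array<Pt2, 21>& lm)
{
    return IndexSideOnPositiveX(lm[0], lm[5], lm[17]);
}
```

In `DenormalizeHandLandmarksForBoneLocation` it would take the place of the `IsLeftHand(squeezed)` call, after copying rows 0, 5 and 17 of the Mat into `Pt2` values.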

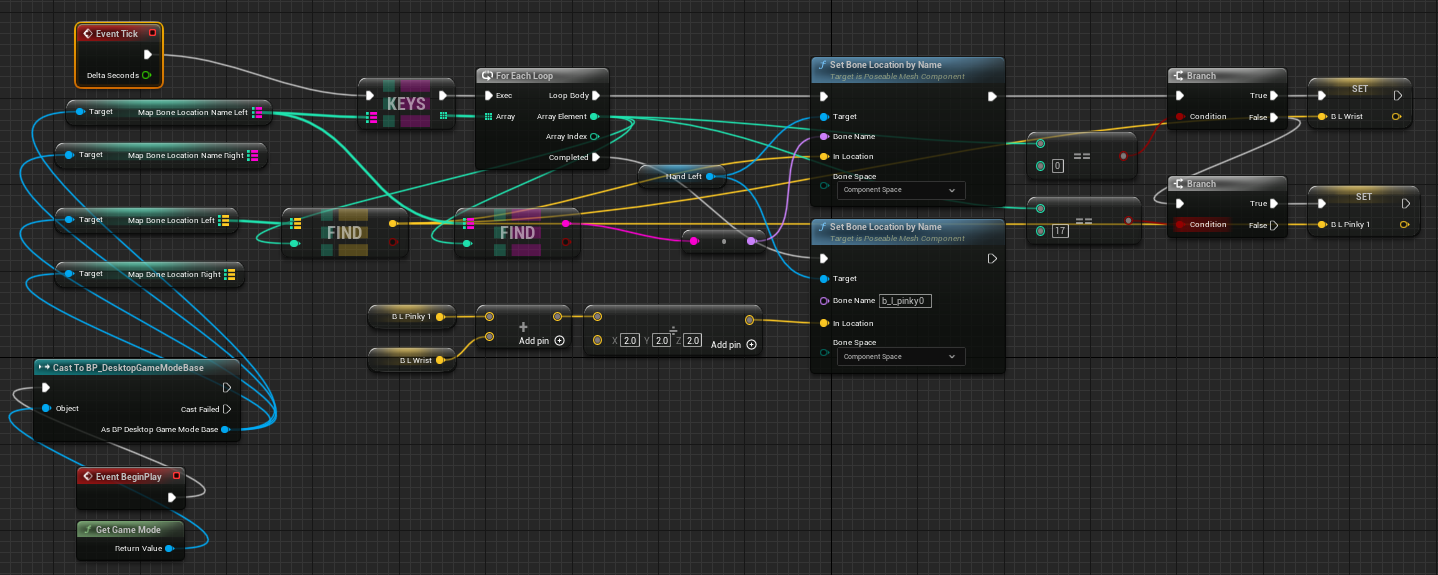

But on the Unreal side there is no way to read a cv::Mat, so I use maps of vectors instead.

I added this to DesktopGameModeBase:

```cpp
    // bone location
    UPROPERTY(BlueprintReadWrite, Category = "LocationMap")
    TMap<int32, FVector> MapBoneLocationLeft;

    UPROPERTY(BlueprintReadWrite, Category = "LocationMap")
    TMap<int32, FVector> MapBoneLocationRight;

    void make_map_for_location();
};
```

```cpp
void ADesktopGameModeBase::make_map_for_location()
{
    cv::Mat HandLeftLocMat = blaze.handLeft;
    cv::Mat HandRightLocMat = blaze.handRight;

    for (int j = 0; j < HandLeftLocMat.size[0]; j++)
    {
        FVector vec(HandLeftLocMat.at<float>(j, 0), HandLeftLocMat.at<float>(j, 1), HandLeftLocMat.at<float>(j, 2));
        MapBoneLocationLeft.Add(j, vec);
    }

    for (int j = 0; j < HandRightLocMat.size[0]; j++)
    {
        FVector vec(HandRightLocMat.at<float>(j, 0), HandRightLocMat.at<float>(j, 1), HandRightLocMat.at<float>(j, 2));
        MapBoneLocationRight.Add(j, vec);
    }
}
```

In ReadFrame, I commented out the rotator call and added the location-map call:

```cpp
    //make_map_for_rotators(denorm_imgs_landmarks);
    make_map_for_location();
```

Since the Blueprint couldn't get hold of the map itself, I changed it to create the map once up front and then only update its values:
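The create-once, update-every-frame pattern can be sketched in plain C++, with `std::map<int, Vec3>` standing in for `TMap<int32, FVector>` (an assumption made only so the sketch compiles without Unreal). The key set is fixed when the map is created, and the per-frame update only overwrites values. `Vec3`, `MakeLocationMap` and `SetLocationMap` are hypothetical names; passing `nullptr` models a frame with no detected hand, in which case the previous values are kept.

```cpp
#include <array>
#include <cassert>
#include <map>

struct Vec3 { float x, y, z; };

// Stand-in for TMap<int32, FVector>.
using BoneLocationMap = std::map<int, Vec3>;

constexpr int kNumLandmarks = 21;

// Run once (the role make_map_for_location plays in BeginPlay): keys 0..20 exist from the start.
void MakeLocationMap(BoneLocationMap& map)
{
    for (int j = 0; j < kNumLandmarks; ++j)
        map[j] = Vec3{0.0f, 0.0f, 0.0f};
}

// Run every frame (the role of set_map_for_location): values change, the key set never does.
void SetLocationMap(BoneLocationMap& map, const std::array<Vec3, kNumLandmarks>* landmarks)
{
    if (landmarks == nullptr)
        return;  // no hand this frame: keep the last known positions
    for (int j = 0; j < kNumLandmarks; ++j)
        map[j] = (*landmarks)[j];
}
```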

```cpp
    // bone location
    UPROPERTY(BlueprintReadWrite, Category = "LocationMap")
    TMap<int32, FVector> MapBoneLocationLeft;

    UPROPERTY(BlueprintReadWrite, Category = "LocationMap")
    TMap<int32, FVector> MapBoneLocationRight;

    void set_map_for_location();
    void make_map_for_location();
};
```

```cpp
void ADesktopGameModeBase::set_map_for_location()
{
    cv::Mat HandLeftLocMat = blaze.handLeft;
    cv::Mat HandRightLocMat = blaze.handRight;

    for (int j = 0; j < HandLeftLocMat.size[0]; j++)
    {
        FVector vec(HandLeftLocMat.at<float>(j, 0), HandLeftLocMat.at<float>(j, 1), HandLeftLocMat.at<float>(j, 2));
        MapBoneLocationLeft.Add(j, vec);
    }

    for (int j = 0; j < HandRightLocMat.size[0]; j++)
    {
        FVector vec(HandRightLocMat.at<float>(j, 0), HandRightLocMat.at<float>(j, 1), HandRightLocMat.at<float>(j, 2));
        MapBoneLocationRight.Add(j, vec);
    }
}

void ADesktopGameModeBase::make_map_for_location()
{
    for (int j = 0; j < 21; j++)
    {
        MapBoneLocationLeft.Add(j, FVector(0, 0, 0));
        MapBoneLocationRight.Add(j, FVector(0, 0, 0));
    }
}
```

Values are printed now, but they are all 0, 0, 0.

Checking the matrix values showed that neither matrix was coming through correctly:

```cpp
void ADesktopGameModeBase::set_map_for_location()
{
    cv::Mat HandLeftLocMat = blaze.handLeft;
    cv::Mat HandRightLocMat = blaze.handRight;

    UE_LOG(LogTemp, Log, TEXT("rows : %d, cols : %d"), HandLeftLocMat.size[0], HandLeftLocMat.size[1]);
    // ... (the loops that fill the maps follow, as above)
}
```

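While looking into why, I found that the inference results came out somewhat differently from the normalized landmarks I had used earlier.

It seems the landmarks I had saved were actually from a right hand. Here is the Python inference script: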

```python
import cv2
import time
import numpy as np
import traceback


def get_color_filtered_boxes(image):
    # convert the image to the HSV color space
    hsv_image = cv2.cvtColor(image, cv2.COLOR_BGR2HSV)
    # mask of skin-colored pixels
    skin_mask = cv2.inRange(hsv_image, lower_skin, upper_skin)
    # structuring element for the morphology operation
    kernel = cv2.getStructuringElement(cv2.MORPH_ELLIPSE, (9, 9))
    # morphological opening removes small specks
    skin_mask = cv2.morphologyEx(skin_mask, cv2.MORPH_OPEN, kernel)
    # extract the skin region with the mask
    skin_image = cv2.bitwise_and(image, image, mask=skin_mask)
    # bounding boxes of the skin regions
    contours, _ = cv2.findContours(skin_mask, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    bounding_boxes = [cv2.boundingRect(cnt) for cnt in contours]
    # keep only boxes with an area above 100 * 100
    color_boxes = []
    for (x, y, w, h) in bounding_boxes:
        if w * h > 100 * 100:
            # enlarge the box a little: a square around the center, 1.4x the longer side
            #color_boxes.append((x - 30, y - 30, w + 60, h + 60))
            center_x = int((x + x + w) / 2)
            center_y = int((y + y + h) / 2)
            large = max(w, h)  # longer side (also covers w == h)
            large = int(large * 0.7)
            color_boxes.append((center_x - large, center_y - large, 2 * large, 2 * large))
    return color_boxes


def landmark_inference(img):
    #tensor = img / 127.5 - 1.0
    tensor = img / 256
    blob = cv2.dnn.blobFromImage(tensor.astype(np.float32), swapRB=False, crop=False)
    net.setInput(blob)
    preds = net.forward(outNames)
    return preds


def denormalize_landmarks(landmarks):
    landmarks[:, :, :2] *= 256
    return landmarks


def draw_landmarks(img, points, connections=[], color=(0, 255, 0), size=2):
    points = points[:, :2]
    for point in points:
        x, y = point
        x, y = int(x), int(y)
        cv2.circle(img, (x, y), size, color, thickness=size)
    for connection in connections:
        x0, y0 = points[connection[0]]
        x1, y1 = points[connection[1]]
        x0, y0 = int(x0), int(y0)
        x1, y1 = int(x1), int(y1)
        cv2.line(img, (x0, y0), (x1, y1), (0, 0, 0), size)


HAND_CONNECTIONS = [
    (0, 1), (1, 2), (2, 3), (3, 4),
    (5, 6), (6, 7), (7, 8),
    (9, 10), (10, 11), (11, 12),
    (13, 14), (14, 15), (15, 16),
    (17, 18), (18, 19), (19, 20),
    (0, 5), (5, 9), (9, 13), (13, 17), (0, 17)
]

# skin-color range (HSV color space)
lower_skin = np.array([0, 20, 70], dtype=np.uint8)
upper_skin = np.array([20, 255, 255], dtype=np.uint8)

img_width = 640
img_height = 480
lm_infer_width = 256
lm_infer_height = 256

net = cv2.dnn.readNet('blazehand.onnx')
#net = cv2.dnn.readNet('hand_landmark.onnx')
outNames = net.getUnconnectedOutLayersNames()
print(outNames)

cap = cv2.VideoCapture(0)
norm_landmarks = None

while True:
    time_start = time.time()
    try:
        roi = None
        ret, frame = cap.read()
        if not ret:
            break
        frame = cv2.resize(frame, (img_width, img_height))
        skin_image = frame.copy()

        # skin-colored boxes (too-small ones are dropped)
        color_boxes = get_color_filtered_boxes(skin_image)

        # draw the bounding boxes on the image
        for (x, y, w, h) in color_boxes:
            cv2.rectangle(skin_image, (x, y), (x + w, y + h), (0, 255, 0), 2)

        for idx, color_box in enumerate(color_boxes):
            x, y, w, h = color_box
            cbox_ratio_width = w / lm_infer_width
            cbox_ratio_height = h / lm_infer_height
            roi = frame[y:y+h, x:x+w]
            roi = cv2.resize(roi, (lm_infer_width, lm_infer_height))
            lm_input = cv2.cvtColor(roi, cv2.COLOR_BGR2RGB)

            preds = landmark_inference(lm_input)
            landmarks = preds[2]
            flags = preds[0]
            norm_landmarks = landmarks.copy()
            denorm_landmarks = denormalize_landmarks(landmarks)

            for i in range(len(flags)):
                # separate names so the flags array is not overwritten inside the loop
                landmark, flag = denorm_landmarks[i], flags[i]
                if flag > .5:
                    draw_landmarks(roi, landmark[:, :2], HAND_CONNECTIONS, size=2)

        if cv2.waitKey(1) == ord('q'):
            break

        time_cur = time.time()
        cv2.putText(frame, f"time spend: {time_cur - time_start}", (0, 50), cv2.FONT_HERSHEY_SIMPLEX, 2, (125, 125, 125), 2)
        #frame = cv2.resize(frame, (320, 240))
        if roi is not None:
            cv2.imshow('roi', roi)
        cv2.imshow('Camera Streaming', frame)
        cv2.imshow('Skin Extraction', skin_image)
    except Exception as e:
        traceback.print_exc()

cap.release()
cv2.destroyAllWindows()
```

The flipped y axis aside, the thumb ends up on the side with the smaller x.

For a right hand it's the other way around: the thumb goes to the right.

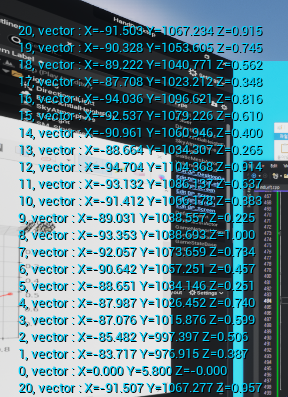

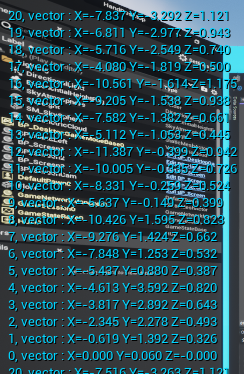

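It's good that the left/right misclassification is fixed, but the values come out a bit strange.

Before denormalization they were clearly in the 0 to 1 range, so I wondered what happened. Unlike the Python version, which transforms every column at once with NumPy array operations, the plain C++ loop overwrites values that later lines still read: column 0 is replaced before column 1 is computed from it, and the wrist row (row 0) that serves as the y reference is itself overwritten in the very first iteration.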

```cpp
// in place: the values read on the right-hand side may already have been overwritten
for (int j = 0; j < squeezed_for_bone.size[0]; j++)
{
    squeezed_for_bone.at<float>(j, 0) = (squeezed_for_bone.at<float>(j, 1) - squeezed_for_bone.at<float>(0, 1)) * skeletonXRatio;
    squeezed_for_bone.at<float>(j, 1) = (squeezed_for_bone.at<float>(j, 0) - 0.5) * skeletonYRatio * -1;
    squeezed_for_bone.at<float>(j, 2) = squeezed_for_bone.at<float>(j, 2) * skeletonYRatio * -1;
    // columns are 0, 1, 2 (the Mat has only 3 columns)
    UE_LOG(LogTemp, Log, TEXT("squeezed_for_bone %d : %f, %f, %f"), j, squeezed_for_bone.at<float>(j, 0), squeezed_for_bone.at<float>(j, 1), squeezed_for_bone.at<float>(j, 2));
}
```

Reading from a separate, untouched source matrix instead:

```cpp
// squeezed_for_origin: an untouched copy of the normalized landmarks (e.g. made with clone()),
// so every line below reads original values only
for (int j = 0; j < squeezed_for_bone.size[0]; j++)
{
    squeezed_for_bone.at<float>(j, 0) = (squeezed_for_origin.at<float>(j, 1) - squeezed_for_origin.at<float>(0, 1)) * skeletonXRatio;
    squeezed_for_bone.at<float>(j, 1) = (squeezed_for_origin.at<float>(j, 0) - 0.5) * skeletonYRatio * -1;
    squeezed_for_bone.at<float>(j, 2) = squeezed_for_origin.at<float>(j, 2) * skeletonYRatio * -1;
    UE_LOG(LogTemp, Log, TEXT("squeezed_for_bone %d : %f, %f, %f"), j, squeezed_for_bone.at<float>(j, 0), squeezed_for_bone.at<float>(j, 1), squeezed_for_bone.at<float>(j, 2));
}
```
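The difference between the two loops can be reproduced without OpenCV. This sketch uses a hypothetical `Landmarks` type (a `std::array` of 21 rows of x, y, z) in place of `cv::Mat`. `DenormalizeInPlace` mirrors the first loop: it reads column 0 after overwriting it, and from row 1 onward the wrist reference `lm[0][1]` is already modified. `DenormalizeFromCopy` mirrors the fixed loop and reads only from the untouched source.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical stand-in for the 21x3 cv::Mat: one row of (x, y, z) per landmark.
using Landmarks = std::array<std::array<float, 3>, 21>;

constexpr float kSkeletonXRatio = 16.5f;
constexpr float kSkeletonYRatio = 11.6f;

// Same arithmetic as the first loop: every write lands in the array that later reads use.
void DenormalizeInPlace(Landmarks& lm)
{
    for (auto& row : lm) {
        row[0] = (row[1] - lm[0][1]) * kSkeletonXRatio;     // lm[0][1] is already modified after row 0
        row[1] = (row[0] - 0.5f) * kSkeletonYRatio * -1.0f; // row[0] is no longer the normalized x
        row[2] = row[2] * kSkeletonYRatio * -1.0f;
    }
}

// Same arithmetic as the fixed loop: read only from src, write only to the result.
Landmarks DenormalizeFromCopy(const Landmarks& src)
{
    Landmarks dst{};
    for (std::size_t j = 0; j < src.size(); ++j) {
        dst[j][0] = (src[j][1] - src[0][1]) * kSkeletonXRatio;
        dst[j][1] = (src[j][0] - 0.5f) * kSkeletonYRatio * -1.0f;
        dst[j][2] = src[j][2] * kSkeletonYRatio * -1.0f;
    }
    return dst;
}
```

With every landmark at normalized (0.6, 0.5, 0), the copy-based version gives y' of about -1.16 for every row, while the in-place version produces values above 1000 from row 1 onward.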

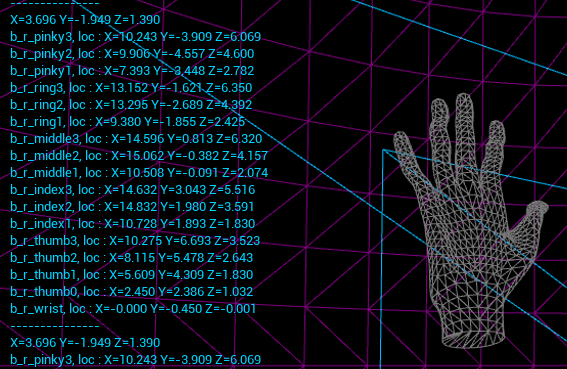

That looks about right, but the signs of x and y seem to be wrong.

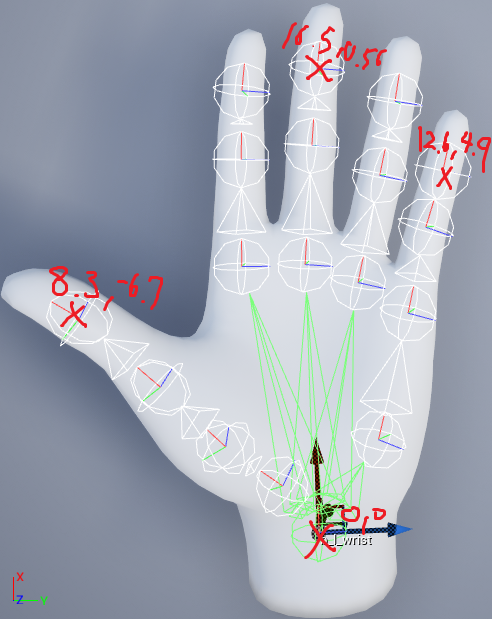

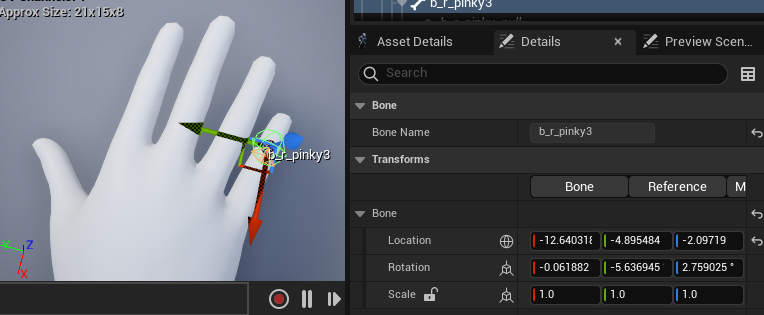

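In component space the fingertips sit at the positions in the second column below, but the denormalized coordinates I got have both x and y flipped:

| Landmark | Component space (x, y) | Denormalized (x, y) |
| --- | --- | --- |
| 20 (pinky tip) | 12.6, 4.9 | -7.8, -3.3 |
| 16 (ring tip) | 16.5, 0.56 | -10, -1.6 |
| 4 (thumb tip) | 8.3, -6.7 | -4.6, 3.6 |

The x sign is probably wrong because I had earlier worked out the left-hand conversion from what was actually right-hand data; I don't know yet why y is flipped.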
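A quick way to double-check which axes are flipped is to correlate each axis of the two point sets. This sketch (hypothetical `Vec2` and `EstimateAxisSigns`) sums reference × measured per axis and keeps only the sign. With the three landmarks 20, 16 and 4 listed above, both sums come out negative, which agrees with x and y both being flipped. It detects sign only, not scale.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

struct Vec2 { float x, y; };

// For each axis: +1 if the measured points agree in sign with the reference on balance,
// -1 if they mostly point the opposite way (sign of the sum of reference * measured).
template <std::size_t N>
Vec2 EstimateAxisSigns(const std::array<Vec2, N>& reference, const std::array<Vec2, N>& measured)
{
    float sx = 0.0f;
    float sy = 0.0f;
    for (std::size_t i = 0; i < N; ++i) {
        sx += reference[i].x * measured[i].x;
        sy += reference[i].y * measured[i].y;
    }
    return { sx >= 0.0f ? 1.0f : -1.0f, sy >= 0.0f ? 1.0f : -1.0f };
}
```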

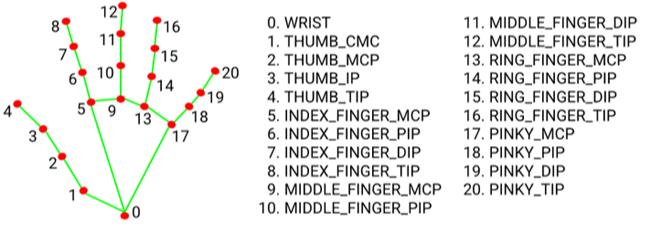

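Next I need to set the bone positions, but the number of bones doesn't match the number of landmarks.

There are 21 landmarks, but only 4 + 3 + 3 + 3 + 4 + 1 = 18 bones (thumb0 to thumb3; three each for index, middle and ring; pinky0 to pinky3; and the wrist), so they can't be paired one to one.

For now, pairing up only the ones that have a reasonable match: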

| BlazeHand landmark | Bone | BlazeHand landmark | Bone |
| --- | --- | --- | --- |
| 5 | b_l_index1 | 17 | b_l_pinky1 |
| 6 | b_l_index2 | 18 | b_l_pinky2 |
| 7 | b_l_index3 | 19 | b_l_pinky3 |
| 9 | b_l_middle1 | 1 | b_l_thumb0 |
| 10 | b_l_middle2 | 2 | b_l_thumb1 |
| 11 | b_l_middle3 | 3 | b_l_thumb2 |
| 13 | b_l_ring1 | 4 | b_l_thumb3 |
| 14 | b_l_ring2 | 0 | b_l_wrist |
| 15 | b_l_ring3 | | |

That is roughly the mapping, but b_l_pinky0 will probably have to be placed somewhere between pinky1 and the wrist.
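One way to synthesize `b_l_pinky0` is linear interpolation between the wrist (landmark 0) and the pinky MCP (landmark 17, which maps to `b_l_pinky1`). The sketch uses hypothetical `Vec3` and `EstimatePinky0`; `t = 0.5` gives the midpoint, and whether that matches the mesh's pinky0 rest position is an assumption to check in the editor.

```cpp
#include <array>
#include <cassert>

struct Vec3 { float x, y, z; };

// Place pinky0 on the segment wrist (landmark 0) -> pinky MCP (landmark 17).
// t = 0 is the wrist, t = 1 is pinky1; 0.5 is the midpoint.
Vec3 EstimatePinky0(const std::array<Vec3, 21>& lm, float t = 0.5f)
{
    assert(t >= 0.0f && t <= 1.0f);
    const Vec3& wrist = lm[0];
    const Vec3& pinky1 = lm[17];
    return { wrist.x + (pinky1.x - wrist.x) * t,
             wrist.y + (pinky1.y - wrist.y) * t,
             wrist.z + (pinky1.z - wrist.z) * t };
}
```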

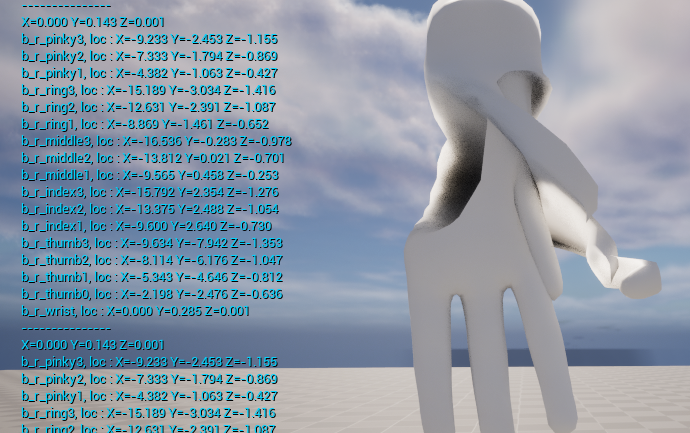

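It roughly works, but the hand looked a bit small, so I added a scale factor.

The positions seem to match well, but since no rotation is set, the hand is distorted with no rotation at all, which is a bit disappointing.

Seen straight on from the back of the hand it isn't bad, but from the side it falls short.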

Next, let's add movement on screen.
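Moving the hand on screen comes down to turning an image position into a position in the level. A sketch of one possible mapping, assuming the position comes in as a pixel coordinate of the 640×480 webcam frame (for example landmark 9, the middle-finger MCP that `set_hand_pos_world` reads) and is placed on a plane whose half-extents `halfWidth`/`halfHeight` are chosen freely; `PixelToPlane` and `Vec2` are hypothetical names, and the post's actual mapping happens in the Blueprint, which isn't shown.

```cpp
#include <cassert>

struct Vec2 { float x, y; };

// Map a webcam pixel (u, v) to a point on a plane centered at the origin.
// v grows downward in the image, so it is inverted to make "up" positive.
// mirrorX flips the horizontal axis, since a raw webcam image is mirrored
// relative to the user's own left and right.
Vec2 PixelToPlane(float u, float v, int imageWidth, int imageHeight,
                  float halfWidth, float halfHeight, bool mirrorX = true)
{
    assert(imageWidth > 0 && imageHeight > 0);
    float nx = u / imageWidth * 2.0f - 1.0f;   // -1 at the left edge, +1 at the right edge
    float ny = 1.0f - v / imageHeight * 2.0f;  // +1 at the top edge, -1 at the bottom edge
    if (mirrorX)
        nx = -nx;
    return { nx * halfWidth, ny * halfHeight };
}
```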

I created a right-hand class just like the left-hand one:

```cpp
#pragma once

#include "Components/PoseableMeshComponent.h"
#include "CoreMinimal.h"
#include "GameFramework/Actor.h"
#include "HandRight.generated.h"

UCLASS()
class HANDDESKTOP_API AHandRight : public AActor
{
    GENERATED_BODY()

public:
    // Sets default values for this actor's properties
    AHandRight();

protected:
    // Called when the game starts or when spawned
    virtual void BeginPlay() override;

public:
    // Called every frame
    virtual void Tick(float DeltaTime) override;

    UPROPERTY(EditAnywhere, BlueprintReadWrite, Category = PoseableMesh)
    UPoseableMeshComponent* HanRight;
};
```

```cpp
#include "HandRight.h"
#include "Engine/SkeletalMesh.h"
#include "UObject/ConstructorHelpers.h"  // ConstructorHelpers::FObjectFinder

// Sets default values
AHandRight::AHandRight()
{
    // Set this actor to call Tick() every frame. You can turn this off to improve performance if you don't need it.
    PrimaryActorTick.bCanEverTick = true;

    // create the poseable mesh component and give it the right-hand skeletal mesh
    ConstructorHelpers::FObjectFinder<USkeletalMesh> TempHandMesh(
        TEXT("SkeletalMesh'/Game/MyContent/Hand_R.Hand_R'")
    );
    HanRight = CreateDefaultSubobject<UPoseableMeshComponent>(TEXT("HandRight"));
    HanRight->SetSkeletalMesh(TempHandMesh.Object);
    HanRight->SetRelativeLocation(FVector(0, 0, 0));
}

// Called when the game starts or when spawned
void AHandRight::BeginPlay()
{
    Super::BeginPlay();
}

// Called every frame
void AHandRight::Tick(float DeltaTime)
{
    Super::Tick(DeltaTime);
}
```

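I created a BP for the right hand and placed it in the level.

I also modified the BP and the code so the right hand moves too. It moves fine, but in the opposite direction.

That's because the denormalization code I wrote is based on the left hand, and the right-hand and left-hand models are not only mirror images left to right but also flipped upside down.

I thought flipping the signs of x and y would be enough, but it wasn't. I'd like to sort this out cleanly, but it isn't going well.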

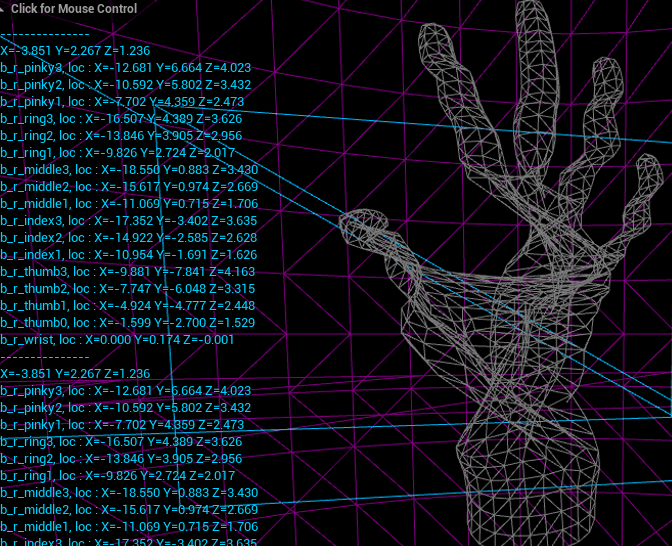

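The left hand faces upward, and its coordinates were as shown above, while the right hand has its palm facing down and is reversed about the origin.

The BlazeHand inference result is flipped only along the x axis, but the models are flipped along both x and y, which makes this tricky.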

| Left-hand inference result | Right-hand inference result |
| --- | --- |

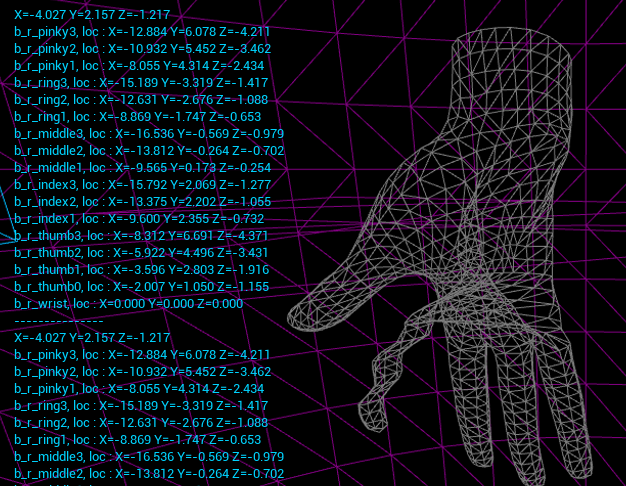

근데 보다보니

손 대부분은 정상적으로 나오지만

뼈하나가 엉뚱한데 간게 아닐까싶더라

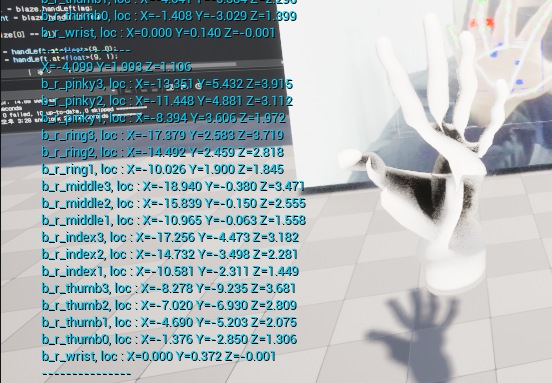

전체 뼈 컴포넌트 공간 좌표 찍어봤는데

의심되던 pinky 0 도 정상이고

f1로보면 왼손은 이쁘게 잘나오는데

아무리봐도

index1이 이상한대 가서그런거같은데

따로봐야될거같아서 0~4까지만 로케이션 설정했을때 결과

17 ~ 19 핑키123 만 걸었을때 결과

이렇게 보니 y좌표 자체가 반대로되서 이런듯

이제 손이 구불구불하지만 제대로 나온다.

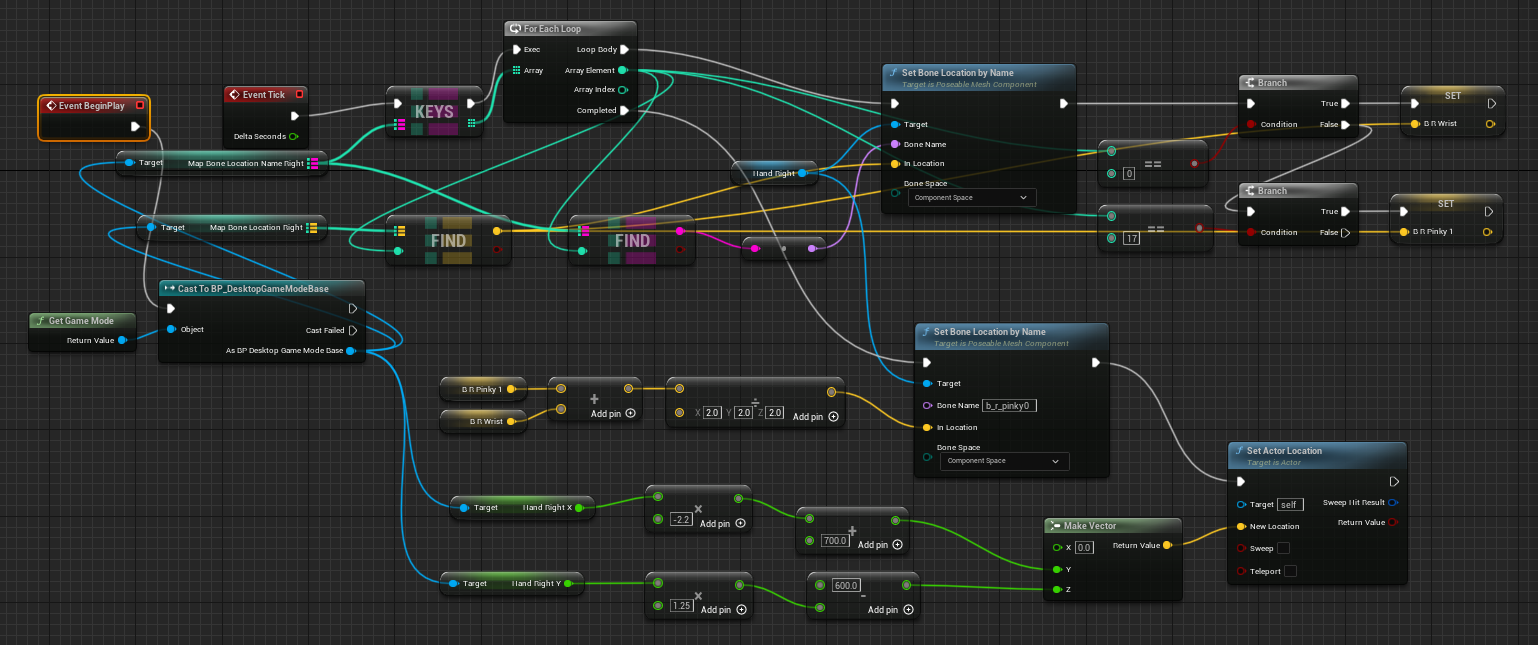

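The cleaned-up Blueprint.

Since positions are only set in component space, all that's left is to align the orientation in world space.

Result of controlling the hands in game.

Game mode code: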

// Fill out your copyright notice in the Description page of Project Settings.

#pragma once

#include "Blaze.h"

#include "Windows/AllowWindowsPlatformTypes.h"

#include <Windows.h>

#include "Windows/HideWindowsPlatformTypes.h"

#include "PreOpenCVHeaders.h"

#include <opencv2/opencv.hpp>

#include "PostOpenCVHeaders.h"

#include "CoreMinimal.h"

#include "GameFramework/GameModeBase.h"

#include "DesktopGameModeBase.generated.h"

/**

*

*/

UCLASS()

class HANDDESKTOP_API ADesktopGameModeBase : public AGameModeBase

{

GENERATED_BODY()

protected:

// Called when the game starts or when spawned

virtual void BeginPlay() override;

public:

UFUNCTION(BlueprintCallable)

void ReadFrame();

int monitorWidth = 1920;

int monitorHeight = 1080;

UPROPERTY(EditAnywhere, BlueprintReadWrite)

UTexture2D* imageTextureScreen1;

UPROPERTY(EditAnywhere, BlueprintReadWrite)

UTexture2D* imageTextureScreen2;

UPROPERTY(EditAnywhere, BlueprintReadWrite)

UTexture2D* imageTextureScreen3;

cv::Mat imageScreen1;

cv::Mat imageScreen2;

cv::Mat imageScreen3;

void ScreensToCVMats();

void CVMatsToTextures();

int webcamWidth = 640;

int webcamHeight = 480;

cv::VideoCapture capture;

cv::Mat webcamImage;

UPROPERTY(EditAnywhere, BlueprintReadWrite)

UTexture2D* webcamTexture;

void MatToTexture2D(const cv::Mat InMat);

//var and functions with blaze

Blaze blaze;

cv::Mat img256;

cv::Mat img128;

float scale;

cv::Scalar pad;

// vars and funcs for rotator

int hand_conns_indexes[14] = {2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15};

void get_pitch_yaw(cv::Point3f pt_start, cv::Point3f pt_end, float& pitch, float& yaw);

//void calculateRotation(const cv::Point3f& pt1, const cv::Point3f& pt2, float& roll, float& pitch, float& yaw);

void make_map_for_rotators(std::vector<cv::Mat> denorm_imgs_landmarks);

void make_map_bone();

UPROPERTY(BlueprintReadWrite, Category = "RotatorMap")

TMap<int32, float> MapRoll;

UPROPERTY(BlueprintReadWrite, Category="RotatorMap")

TMap<int32, float> MapPitch;

UPROPERTY(BlueprintReadWrite, Category = "RotatorMap")

TMap<int32, float> MapYaw;

UPROPERTY(BlueprintReadWrite, Category = "RotatorMap")

TMap<int32, FString> MapBoneLeft;

UPROPERTY(BlueprintReadWrite, Category = "RotatorMap")

TMap<int32, FString> MapBoneRight;

UPROPERTY(BlueprintReadWrite, Category = "HandCoord")

float HandLeftX;

UPROPERTY(BlueprintReadWrite, Category = "HandCoord")

float HandLeftY;

// bone location

UPROPERTY(BlueprintReadWrite, Category = "LocationMap")

TMap<int32, FVector> MapBoneLocationLeft;

UPROPERTY(BlueprintReadWrite, Category = "LocationMap")

TMap<int32, FVector> MapBoneLocationRight;

void set_map_for_location();

void make_map_for_location();

UPROPERTY(BlueprintReadWrite, Category = "LocationMap")

TMap<int32, FString> MapBoneLocationNameLeft;

UPROPERTY(BlueprintReadWrite, Category = "LocationMap")

TMap<int32, FString> MapBoneLocationNameRight;

void make_map_bone_name();

void set_hand_pos_world();

UPROPERTY(BlueprintReadWrite, Category = "HandCoord")

float HandRightX;

UPROPERTY(BlueprintReadWrite, Category = "HandCoord")

float HandRightY;

};// Fill out your copyright notice in the Description page of Project Settings.

#include "DesktopGameModeBase.h"

void ADesktopGameModeBase::BeginPlay()

{

Super::BeginPlay();

blaze = Blaze();

capture = cv::VideoCapture(0);

if (!capture.isOpened())

{

UE_LOG(LogTemp, Log, TEXT("Open Webcam failed"));

return;

}

else

{

UE_LOG(LogTemp, Log, TEXT("Open Webcam Success"));

}

capture.set(cv::CAP_PROP_FRAME_WIDTH, webcamWidth);

capture.set(cv::CAP_PROP_FRAME_HEIGHT, webcamHeight);

webcamTexture = UTexture2D::CreateTransient(monitorWidth, monitorHeight, PF_B8G8R8A8);

imageScreen1 = cv::Mat(monitorHeight, monitorWidth, CV_8UC4);

imageScreen2 = cv::Mat(monitorHeight, monitorWidth, CV_8UC4);

imageScreen3 = cv::Mat(monitorHeight, monitorWidth, CV_8UC4);

imageTextureScreen1 = UTexture2D::CreateTransient(monitorWidth, monitorHeight, PF_B8G8R8A8);

imageTextureScreen2 = UTexture2D::CreateTransient(monitorWidth, monitorHeight, PF_B8G8R8A8);

imageTextureScreen3 = UTexture2D::CreateTransient(monitorWidth, monitorHeight, PF_B8G8R8A8);

make_map_bone();

make_map_for_location();

make_map_bone_name();

}

void ADesktopGameModeBase::ReadFrame()

{

if (!capture.isOpened())

{

return;

}

capture.read(webcamImage);

/*

get filtered detections

*/

blaze.ResizeAndPad(webcamImage, img256, img128, scale, pad);

//UE_LOG(LogTemp, Log, TEXT("scale value: %f, pad value: (%f, %f)"), scale, pad[0], pad[1]);

std::vector<Blaze::PalmDetection> normDets = blaze.PredictPalmDetections(img128);

std::vector<Blaze::PalmDetection> denormDets = blaze.DenormalizePalmDetections(normDets, webcamWidth, webcamHeight, pad);

std::vector<Blaze::PalmDetection> filteredDets = blaze.FilteringDets(denormDets, webcamWidth, webcamHeight);

std::vector<cv::Rect> handRects = blaze.convertHandRects(filteredDets);

std::vector<cv::Mat> handImgs;

blaze.GetHandImages(webcamImage, handRects, handImgs);

std::vector<cv::Mat> imgs_landmarks = blaze.PredictHandDetections(handImgs);

std::vector<cv::Mat> denorm_imgs_landmarks = blaze.DenormalizeHandLandmarksForBoneLocation(imgs_landmarks, handRects);

//make_map_for_rotators(denorm_imgs_landmarks);

set_map_for_location();

set_hand_pos_world();

//draw hand rects/ plam detection/ dets info/ hand detection

blaze.DrawRects(webcamImage, handRects);

blaze.DrawPalmDetections(webcamImage, filteredDets);

blaze.DrawDetsInfo(webcamImage, filteredDets, normDets, denormDets);

blaze.DrawHandDetections(webcamImage, denorm_imgs_landmarks);

//cv::mat to utexture2d

MatToTexture2D(webcamImage);

/*

모니터 시각화

*/

ScreensToCVMats();

CVMatsToTextures();

}

void ADesktopGameModeBase::MatToTexture2D(const cv::Mat InMat)

{

if (InMat.type() == CV_8UC3)//example for pre-conversion of Mat

{

cv::Mat resizedImage;

cv::resize(InMat, resizedImage, cv::Size(monitorWidth, monitorHeight));

cv::Mat bgraImage;

//if the Mat is in BGR space, convert it to BGRA. There is no three channel texture in UE (at least with eight bit)

cv::cvtColor(resizedImage, bgraImage, cv::COLOR_BGR2BGRA);

//actually copy the data to the new texture

FTexture2DMipMap& Mip = webcamTexture->GetPlatformData()->Mips[0];

void* Data = Mip.BulkData.Lock(LOCK_READ_WRITE);//lock the texture data

FMemory::Memcpy(Data, bgraImage.data, bgraImage.total() * bgraImage.elemSize());//copy the data

Mip.BulkData.Unlock();

webcamTexture->PostEditChange();

webcamTexture->UpdateResource();

}

else if (InMat.type() == CV_8UC4)

{

//actually copy the data to the new texture

FTexture2DMipMap& Mip = webcamTexture->GetPlatformData()->Mips[0];

void* Data = Mip.BulkData.Lock(LOCK_READ_WRITE);//lock the texture data

FMemory::Memcpy(Data, InMat.data, InMat.total() * InMat.elemSize());//copy the data

Mip.BulkData.Unlock();

webcamTexture->PostEditChange();

webcamTexture->UpdateResource();

}

//if the texture hasnt the right pixel format, abort.

webcamTexture->PostEditChange();

webcamTexture->UpdateResource();

}

void ADesktopGameModeBase::ScreensToCVMats()

{

HDC hScreenDC = GetDC(NULL);

HDC hMemoryDC = CreateCompatibleDC(hScreenDC);

int screenWidth = GetDeviceCaps(hScreenDC, HORZRES);

int screenHeight = GetDeviceCaps(hScreenDC, VERTRES);

HBITMAP hBitmap = CreateCompatibleBitmap(hScreenDC, screenWidth, screenHeight);

HBITMAP hOldBitmap = (HBITMAP)SelectObject(hMemoryDC, hBitmap);

//screen 1

BitBlt(hMemoryDC, 0, 0, screenWidth, screenHeight, hScreenDC, 0, 0, SRCCOPY);

GetBitmapBits(hBitmap, imageScreen1.total() * imageScreen1.elemSize(), imageScreen1.data);

//screen 2

BitBlt(hMemoryDC, 0, 0, screenWidth, screenHeight, hScreenDC, 1920, 0, SRCCOPY);

GetBitmapBits(hBitmap, imageScreen2.total() * imageScreen2.elemSize(), imageScreen2.data);

//screen 3

BitBlt(hMemoryDC, 0, 0, screenWidth, screenHeight, hScreenDC, 3840, 0, SRCCOPY);

GetBitmapBits(hBitmap, imageScreen3.total() * imageScreen3.elemSize(), imageScreen3.data);

SelectObject(hMemoryDC, hOldBitmap);

DeleteDC(hScreenDC);

DeleteDC(hMemoryDC);

DeleteObject(hBitmap);

DeleteObject(hOldBitmap);

}

void ADesktopGameModeBase::CVMatsToTextures()

{

for (int i = 0; i < 3; i++)

{

if (i == 0)

{

FTexture2DMipMap& Mip = imageTextureScreen1->GetPlatformData()->Mips[0];

void* Data = Mip.BulkData.Lock(LOCK_READ_WRITE);//lock the texture data

FMemory::Memcpy(Data, imageScreen1.data, imageScreen1.total() * imageScreen1.elemSize());//copy the data

Mip.BulkData.Unlock();

imageTextureScreen1->PostEditChange();

imageTextureScreen1->UpdateResource();

}

else if (i == 1)

{

FTexture2DMipMap& Mip = imageTextureScreen2->GetPlatformData()->Mips[0];

void* Data = Mip.BulkData.Lock(LOCK_READ_WRITE);//lock the texture data

FMemory::Memcpy(Data, imageScreen2.data, imageScreen2.total() * imageScreen2.elemSize());//copy the data

Mip.BulkData.Unlock();

imageTextureScreen2->PostEditChange();

imageTextureScreen2->UpdateResource();

}

else if (i == 2)

{

FTexture2DMipMap& Mip = imageTextureScreen3->GetPlatformData()->Mips[0];

void* Data = Mip.BulkData.Lock(LOCK_READ_WRITE);//lock the texture data

FMemory::Memcpy(Data, imageScreen3.data, imageScreen3.total() * imageScreen3.elemSize());//copy the data

Mip.BulkData.Unlock();

imageTextureScreen3->PostEditChange();

imageTextureScreen3->UpdateResource();

}

}

}

void ADesktopGameModeBase::get_pitch_yaw(cv::Point3f pt_start, cv::Point3f pt_end, float& pitch, float& yaw) {

float dx = pt_end.x - pt_start.x;

float dy = pt_end.y - pt_start.y;

dy *= -1;

yaw = std::atan2(dy, dx) * 180 / CV_PI;

yaw = yaw - 90;

float dz = pt_end.z - pt_start.z;

float xy_norm = std::sqrt(dx * dx + dy * dy);

pitch = std::atan2(dz, xy_norm) * 180 / CV_PI;

pitch *= -1;

}

/*

void ADesktopGameModeBase::calculateRotation(const cv::Point3f& pt1, const cv::Point3f& pt2, float& roll, float& pitch, float& yaw) {

cv::Point3f vec = pt2 - pt1; // 두 벡터를 연결하는 벡터 계산

roll = atan2(vec.y, vec.x) * 180 / CV_PI; // 롤(Roll) 회전 계산

pitch = asin(vec.z / cv::norm(vec)) * 180 / CV_PI; // 피치(Pitch) 회전 계산

cv::Point2f vecXY(vec.x, vec.y); // xy 평면으로 투영

yaw = (atan2(vec.y, vec.x) - atan2(vec.z, cv::norm(vecXY))) * 180 / CV_PI; // 요(Yaw) 회전 계산

}

*/

void ADesktopGameModeBase::make_map_for_rotators(std::vector<cv::Mat> denorm_imgs_landmarks)

{

for (auto& denorm_landmarks : denorm_imgs_landmarks)

{

std::vector<std::array<int, 2>> HAND_CONNECTIONS = blaze.HAND_CONNECTIONS;

for (auto& hand_conns_index : hand_conns_indexes)

{

std::array<int, 2> hand_conns = HAND_CONNECTIONS.at(hand_conns_index);

float roll, pitch, yaw;

cv::Point3f pt_start, pt_end;

pt_start.x = denorm_landmarks.at<float>(hand_conns.at(0), 0);

pt_start.y = denorm_landmarks.at<float>(hand_conns.at(0), 1);

pt_start.z = denorm_landmarks.at<float>(hand_conns.at(0), 2);

pt_end.x = denorm_landmarks.at<float>(hand_conns.at(1), 0);

pt_end.y = denorm_landmarks.at<float>(hand_conns.at(1), 1);

pt_end.z = denorm_landmarks.at<float>(hand_conns.at(1), 2);

//calculateRotation(pt_start, pt_end, roll, pitch, yaw);

get_pitch_yaw(pt_start, pt_end, pitch, yaw);

MapRoll.Add(hand_conns_index, roll);

MapPitch.Add(hand_conns_index, pitch);

MapYaw.Add(hand_conns_index, yaw);

}

HandLeftX = denorm_landmarks.at<float>(9, 0);

HandLeftY = denorm_landmarks.at<float>(9, 1);

}

}

/*

std::vector<std::array<int, 2>> HAND_CONNECTIONS = {

{0, 1}, {1, 2}, {2, 3}, {3, 4},

{5, 6}, {6, 7}, {7, 8},

{9, 10}, {10, 11}, {11, 12},

{13, 14}, {14, 15}, {15, 16},

{17, 18}, {18, 19}, {19, 20},

{0, 5}, {5, 9}, {9, 13}, {13, 17}, {0, 17}

};

*/

void ADesktopGameModeBase::make_map_bone()

{

MapBoneLeft.Add(2, FString("b_l_thumb2"));

MapBoneLeft.Add(3, FString("b_l_thumb3"));

MapBoneLeft.Add(4, FString("b_l_index1"));

MapBoneLeft.Add(5, FString("b_l_index2"));

MapBoneLeft.Add(6, FString("b_l_index3"));

MapBoneLeft.Add(7, FString("b_l_middle1"));

MapBoneLeft.Add(8, FString("b_l_middle2"));

MapBoneLeft.Add(9, FString("b_l_middle3"));

MapBoneLeft.Add(10, FString("b_l_ring1"));

MapBoneLeft.Add(11, FString("b_l_ring2"));

MapBoneLeft.Add(12, FString("b_l_ring3"));

MapBoneLeft.Add(13, FString("b_l_pinky1"));

MapBoneLeft.Add(14, FString("b_l_pinky2"));

MapBoneLeft.Add(15, FString("b_l_pinky3"));

MapBoneRight.Add(2, FString("b_r_thumb2"));

MapBoneRight.Add(3, FString("b_r_thumb3"));

MapBoneRight.Add(4, FString("b_r_index1"));

MapBoneRight.Add(5, FString("b_r_index2"));

MapBoneRight.Add(6, FString("b_r_index3"));

MapBoneRight.Add(7, FString("b_r_middle1"));

MapBoneRight.Add(8, FString("b_r_middle2"));

MapBoneRight.Add(9, FString("b_r_middle3"));

MapBoneRight.Add(10, FString("b_r_ring1"));

MapBoneRight.Add(11, FString("b_r_ring2"));

MapBoneRight.Add(12, FString("b_r_ring3"));

MapBoneRight.Add(13, FString("b_r_pinky1"));

MapBoneRight.Add(14, FString("b_r_pinky2"));

MapBoneRight.Add(15, FString("b_r_pinky3"));

MapBoneLeft.Add(16, FString("b_l_wrist"));

MapBoneLeft.Add(17, FString("b_l_thumb0"));

MapBoneLeft.Add(18, FString("b_l_thumb1"));

MapBoneLeft.Add(19, FString("b_l_pinky0"));

MapBoneRight.Add(16, FString("b_r_wrist"));

MapBoneRight.Add(17, FString("b_r_thumb0"));

MapBoneRight.Add(18, FString("b_r_thumb1"));

MapBoneRight.Add(19, FString("b_r_pinky0"));

}

void ADesktopGameModeBase::set_map_for_location()

{

cv::Mat HandLeftLocMat = blaze.handLeft;

cv::Mat HandRightLocMat = blaze.handRight;

//UE_LOG(LogTemp, Log, TEXT("rows : %d, cols : %d"), HandLeftLocMat.size[0], HandLeftLocMat.size[1]);

for (int j = 0; j < HandLeftLocMat.size[0]; j++)

{

FVector vec(HandLeftLocMat.at<float>(j, 0), HandLeftLocMat.at<float>(j, 1), HandLeftLocMat.at<float>(j, 2));

MapBoneLocationLeft.Add(j, vec);

}

for (int j = 0; j < HandRightLocMat.size[0]; j++)

{

FVector vec(HandRightLocMat.at<float>(j, 0), HandRightLocMat.at<float>(j, 1), HandRightLocMat.at<float>(j, 2));

MapBoneLocationRight.Add(j, vec);

}

//UE_LOG(LogTemp, Log, TEXT("HandRightLocMat.size[0] : %d"), HandRightLocMat.size[0]);

}

void ADesktopGameModeBase::make_map_for_location()

{

for (int j = 0; j < 21; j++)

{

MapBoneLocationLeft.Add(j, FVector(0, 0, 0));

MapBoneLocationRight.Add(j, FVector(0, 0, 0));

}

}

void ADesktopGameModeBase::make_map_bone_name()

{

MapBoneLocationNameLeft.Add(0, FString("b_l_wrist"));

MapBoneLocationNameLeft.Add(1, FString("b_l_thumb0"));

MapBoneLocationNameLeft.Add(2, FString("b_l_thumb1"));

MapBoneLocationNameLeft.Add(3, FString("b_l_thumb2"));

MapBoneLocationNameLeft.Add(4, FString("b_l_thumb3"));

MapBoneLocationNameLeft.Add(5, FString("b_l_index1"));

MapBoneLocationNameLeft.Add(6, FString("b_l_index2"));

MapBoneLocationNameLeft.Add(7, FString("b_l_index3"));

MapBoneLocationNameLeft.Add(9, FString("b_l_middle1"));

MapBoneLocationNameLeft.Add(10, FString("b_l_middle2"));

MapBoneLocationNameLeft.Add(11, FString("b_l_middle3"));

MapBoneLocationNameLeft.Add(13, FString("b_l_ring1"));

MapBoneLocationNameLeft.Add(14, FString("b_l_ring2"));

MapBoneLocationNameLeft.Add(15, FString("b_l_ring3"));

MapBoneLocationNameLeft.Add(17, FString("b_l_pinky1"));

MapBoneLocationNameLeft.Add(18, FString("b_l_pinky2"));

MapBoneLocationNameLeft.Add(19, FString("b_l_pinky3"));

MapBoneLocationNameRight.Add(0, FString("b_r_wrist"));

MapBoneLocationNameRight.Add(1, FString("b_r_thumb0"));

MapBoneLocationNameRight.Add(2, FString("b_r_thumb1"));

MapBoneLocationNameRight.Add(3, FString("b_r_thumb2"));

MapBoneLocationNameRight.Add(4, FString("b_r_thumb3"));

MapBoneLocationNameRight.Add(5, FString("b_r_index1"));

MapBoneLocationNameRight.Add(6, FString("b_r_index2"));

MapBoneLocationNameRight.Add(7, FString("b_r_index3"));

MapBoneLocationNameRight.Add(9, FString("b_r_middle1"));

MapBoneLocationNameRight.Add(10, FString("b_r_middle2"));

MapBoneLocationNameRight.Add(11, FString("b_r_middle3"));

MapBoneLocationNameRight.Add(13, FString("b_r_ring1"));

MapBoneLocationNameRight.Add(14, FString("b_r_ring2"));

MapBoneLocationNameRight.Add(15, FString("b_r_ring3"));

MapBoneLocationNameRight.Add(17, FString("b_r_pinky1"));

MapBoneLocationNameRight.Add(18, FString("b_r_pinky2"));

MapBoneLocationNameRight.Add(19, FString("b_r_pinky3"));

}
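The index-to-bone table above follows a regular pattern. It holds the wrist, four thumb bones (thumb0 to thumb3 for landmarks 1 to 4), and three bones for each remaining finger, with the fingertips 8, 12, 16 and 20 left out. The sketch below generates the table in a loop instead of 34 hand-written `Add` calls. `BuildBoneNameMap` is a hypothetical helper. `std::map` and `std::string` stand in for Unreal's `TMap` and `FString` only so the example compiles outside the engine.

```cpp
#include <cassert>
#include <map>
#include <string>

// Hypothetical helper (not part of the project): builds the same
// landmark-index -> bone-name table that make_map_bone_name() fills by hand.
// side must be 'l' or 'r'.
std::map<int, std::string> BuildBoneNameMap(char side)
{
    assert(side == 'l' || side == 'r');
    const std::string prefix = std::string("b_") + side + "_";
    std::map<int, std::string> names;

    names[0] = prefix + "wrist";

    // Thumb: landmarks 1..4 -> thumb0..thumb3 (the thumb tip does get a bone).
    for (int k = 0; k < 4; ++k)
        names[1 + k] = prefix + "thumb" + std::to_string(k);

    // Other fingers use 4 landmarks each, starting at 5, 9, 13, 17.
    // The first three map to <finger>1..<finger>3; the tip (8, 12, 16, 20) is skipped.
    const char* fingers[] = {"index", "middle", "ring", "pinky"};
    for (int f = 0; f < 4; ++f)
        for (int k = 0; k < 3; ++k)
            names[5 + 4 * f + k] = prefix + fingers[f] + std::to_string(k + 1);

    return names;
}
```

In the project, the entries would still be copied into `MapBoneLocationNameLeft` and `MapBoneLocationNameRight`. The loop simply makes a typo in one of the 34 names less likely.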

void ADesktopGameModeBase::set_hand_pos_world()

{

cv::Mat handLeft = blaze.handLeftImg;

cv::Mat handRight = blaze.handRightImg;

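// Landmark 9 (middle-finger knuckle) in webcam pixels is used as the hand position; size[0] == 21 means a full landmark set is available.

if (handLeft.size[0] == 21)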

{

HandLeftX = handLeft.at<float>(9, 0);

HandLeftY = handLeft.at<float>(9, 1);

}

if (handRight.size[0] == 21)

{

HandRightX = handRight.at<float>(9, 0);

HandRightY = handRight.at<float>(9, 1);

}

}

Blaze class

// Fill out your copyright notice in the Description page of Project Settings.

#pragma once

#include "PreOpenCVHeaders.h"

#include <opencv2/opencv.hpp>

#include "PostOpenCVHeaders.h"

#include "CoreMinimal.h"

/**

*

*/

class HANDDESKTOP_API Blaze

{

public:

Blaze();

~Blaze();

cv::dnn::Net blazePalm;

cv::dnn::Net blazeHand;

// var and funcs for blazepalm

struct PalmDetection {

float ymin;

float xmin;

float ymax;

float xmax;

cv::Point2d kp_arr[7];

float score;

};

int blazeHandSize = 256;

int blazePalmSize = 128;

float palmMinScoreThresh = 0.7;

float palmMinNMSThresh = 0.4;

int palmMinNumKeyPoints = 7;

void ResizeAndPad(

cv::Mat& srcimg, cv::Mat& img256,

cv::Mat& img128, float& scale, cv::Scalar& pad

);

// funcs for blaze palm

std::vector<PalmDetection> PredictPalmDetections(cv::Mat& img);

PalmDetection GetPalmDetection(cv::Mat regressor, cv::Mat classificator,

int stride, int anchor_count, int column, int row, int anchor, int offset);

float sigmoid(float x);

std::vector<PalmDetection> DenormalizePalmDetections(std::vector<PalmDetection> detections, int width, int height, cv::Scalar pad);

void DrawPalmDetections(cv::Mat& img, std::vector<Blaze::PalmDetection> denormDets);

void DrawDetsInfo(cv::Mat& img, std::vector<Blaze::PalmDetection> filteredDets, std::vector<Blaze::PalmDetection> normDets, std::vector<Blaze::PalmDetection> denormDets);

std::vector<PalmDetection> FilteringDets(std::vector<PalmDetection> detections, int width, int height);

// vars and funcs for blaze hand

std::vector<std::array<int, 2>> HAND_CONNECTIONS = {

{0, 1}, {1, 2}, {2, 3}, {3, 4},

{5, 6}, {6, 7}, {7, 8},

{9, 10}, {10, 11}, {11, 12},

{13, 14}, {14, 15}, {15, 16},

{17, 18}, {18, 19}, {19, 20},

{0, 5}, {5, 9}, {9, 13}, {13, 17}, {0, 17}

};

float blazeHandDScale = 3;

int webcamWidth = 640;

int webcamHeight = 480;

std::vector<cv::Rect> convertHandRects(std::vector<PalmDetection> filteredDets);

void DrawRects(cv::Mat& img, std::vector<cv::Rect> rects);

void GetHandImages(cv::Mat& img, std::vector<cv::Rect> rects, std::vector<cv::Mat>& handImgs);

std::vector<cv::Mat> PredictHandDetections(std::vector<cv::Mat>& imgs);

std::vector<cv::Mat> DenormalizeHandLandmarks(std::vector<cv::Mat> imgs_landmarks, std::vector<cv::Rect> rects);

void DrawHandDetections(cv::Mat img, std::vector<cv::Mat> denorm_imgs_landmarks);

// vars and funcs for left/right landmarks and bone locations

float skeletonXRatio = 16.5;

float skeletonYRatio = 11.6;

cv::Mat handLeft;

cv::Mat handRight;

cv::Mat handLeftImg;

cv::Mat handRightImg;

std::vector<cv::Mat> DenormalizeHandLandmarksForBoneLocation(std::vector<cv::Mat> imgs_landmarks, std::vector<cv::Rect> rects);

bool IsLeftHand(cv::Mat normalizedLandmarks);

};

// Fill out your copyright notice in the Description page of Project Settings.

#include "Blaze.h"

Blaze::Blaze()

{

this->blazePalm = cv::dnn::readNet("c:/blazepalm_old.onnx");

this->blazeHand = cv::dnn::readNet("c:/blazehand.onnx");

}

Blaze::~Blaze()

{

}

void Blaze::ResizeAndPad(

cv::Mat& srcimg, cv::Mat& img256,

cv::Mat& img128, float& scale, cv::Scalar& pad

)

{

float h1, w1;

int padw, padh;

cv::Size size0 = srcimg.size();

if (size0.height >= size0.width) {

h1 = 256;

w1 = 256 * size0.width / size0.height;

padh = 0;

padw = static_cast<int>(256 - w1);

scale = size0.width / static_cast<float>(w1);

}

else {

h1 = 256 * size0.height / size0.width;

w1 = 256;

padh = static_cast<int>(256 - h1);

padw = 0;

scale = size0.height / static_cast<float>(h1);

}

//UE_LOG(LogTemp, Log, TEXT("scale value: %f, size0.height: %d, size0.width : %d, h1 : %f"), scale, size0.height, size0.width, h1);

int padh1 = static_cast<int>(padh / 2);

int padh2 = static_cast<int>(padh / 2) + static_cast<int>(padh % 2);

int padw1 = static_cast<int>(padw / 2);

int padw2 = static_cast<int>(padw / 2) + static_cast<int>(padw % 2);

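// pad = (top, left) padding in source-image pixels; DenormalizePalmDetections subtracts pad[0] from y and pad[1] from x.

pad = cv::Scalar(static_cast<float>(padh1 * scale), static_cast<float>(padw1 * scale));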

cv::resize(srcimg, img256, cv::Size(w1, h1));

cv::copyMakeBorder(img256, img256, padh1, padh2, padw1, padw2, cv::BORDER_CONSTANT, cv::Scalar(0, 0, 0));

cv::resize(img256, img128, cv::Size(128, 128));

cv::cvtColor(img256, img256, cv::COLOR_BGR2RGB);

cv::cvtColor(img128, img128, cv::COLOR_BGR2RGB);

}
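The sketch below checks the numbers that `ResizeAndPad` produces. It reproduces the same letterbox arithmetic, plus the inverse used later in `DenormalizePalmDetections`, as standalone code. `Letterbox`, `ComputeLetterbox` and `DenormalizeX/Y` are hypothetical names, and plain ints and floats replace the OpenCV types. For the 640x480 webcam frame:

- the image is resized to 256x192;
- 64 rows of padding are added, 32 of them on top;
- `scale` is 480 / 192 = 2.5;
- the top padding in webcam pixels is 32 x 2.5 = 80.

```cpp
#include <cassert>

// Hypothetical plain-C++ mirror of the arithmetic in Blaze::ResizeAndPad
// and Blaze::DenormalizePalmDetections (no OpenCV types).
struct Letterbox {
    float scale;  // source pixels per pixel of the 256x256 network image
    float padY;   // top padding in source pixels (pad[0] in the original)
    float padX;   // left padding in source pixels (pad[1] in the original)
};

Letterbox ComputeLetterbox(int width, int height)
{
    assert(width > 0 && height > 0);
    int h1, w1, padh, padw;
    if (height >= width) {          // portrait/square: fit height, pad left/right
        h1 = 256;
        w1 = 256 * width / height;  // integer division, as in the original
        padh = 0;
        padw = 256 - w1;
    } else {                        // landscape: fit width, pad top/bottom
        h1 = 256 * height / width;
        w1 = 256;
        padh = 256 - h1;
        padw = 0;
    }
    float scale = (height >= width) ? width / static_cast<float>(w1)
                                    : height / static_cast<float>(h1);
    // Only the first half of the padding (top or left) shifts coordinates.
    return {scale, (padh / 2) * scale, (padw / 2) * scale};
}

// Normalized [0,1] coordinate of the padded 256x256 image -> source pixel.
// max(width, height) equals 256 * scale when the integer division above is exact.
float DenormalizeX(float nx, int width, int height, const Letterbox& lb)
{
    return nx * (width > height ? width : height) - lb.padX;
}

float DenormalizeY(float ny, int width, int height, const Letterbox& lb)
{
    return ny * (width > height ? width : height) - lb.padY;
}
```

A normalized coordinate therefore maps back with `x * 640 - pad[1]` and `y * 640 - pad[0]`. This is why `DenormalizePalmDetections` multiplies by the longer side of the frame.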

std::vector<Blaze::PalmDetection> Blaze::PredictPalmDetections(cv::Mat& img)

{

std::vector<Blaze::PalmDetection> beforeNMSResults;

std::vector<Blaze::PalmDetection> afterNMSResults;

std::vector<float> scores;

std::vector<int> indices;

std::vector<cv::Rect> boundingBoxes;

cv::Mat tensor;

img.convertTo(tensor, CV_32F);

tensor = tensor / 127.5 - 1;

cv::Mat blob = cv::dnn::blobFromImage(tensor, 1.0, tensor.size(), 0, false, false, CV_32F);

std::vector<cv::String> outNames(2);

outNames[0] = "regressors";

outNames[1] = "classificators";

blazePalm.setInput(blob);

std::vector<cv::Mat> outputs;

blazePalm.forward(outputs, outNames);

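// outputs[] follows outNames: outputs[0] is "regressors" (box + keypoints) and

// outputs[1] is "classificators" (scores). The local names are swapped, which is

// consistent with GetPalmDetection: it reads the score from its `regressor` argument

// and the box/keypoints from its `classificator` argument.

cv::Mat classificator = outputs[0];

cv::Mat regressor = outputs[1];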

for (int y = 0; y < 16; ++y) {

for (int x = 0; x < 16; ++x) {

for (int a = 0; a < 2; ++a) {

PalmDetection res = GetPalmDetection(regressor, classificator, 8, 2, x, y, a, 0);

if (res.score != 0)

{

beforeNMSResults.push_back(res);

cv::Point2d startPt = cv::Point2d(res.xmin * blazePalmSize, res.ymin * blazePalmSize);

cv::Point2d endPt = cv::Point2d(res.xmax * blazePalmSize, res.ymax * blazePalmSize);

boundingBoxes.push_back(cv::Rect(startPt, endPt));

scores.push_back(res.score);

}

}

}

}

for (int y = 0; y < 8; ++y) {

for (int x = 0; x < 8; ++x) {

for (int a = 0; a < 6; ++a) {

PalmDetection res = GetPalmDetection(regressor, classificator, 16, 6, x, y, a, 512);

if (res.score != 0)

{

beforeNMSResults.push_back(res);

cv::Point2d startPt = cv::Point2d(res.xmin * blazePalmSize, res.ymin * blazePalmSize);

cv::Point2d endPt = cv::Point2d(res.xmax * blazePalmSize, res.ymax * blazePalmSize);

boundingBoxes.push_back(cv::Rect(startPt, endPt));

scores.push_back(res.score);

}

}

}

}

cv::dnn::NMSBoxes(boundingBoxes, scores, palmMinScoreThresh, palmMinNMSThresh, indices);

for (int i = 0; i < indices.size(); i++) {

int idx = indices[i];

afterNMSResults.push_back(beforeNMSResults[idx]);

}

return afterNMSResults;

}
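`cv::dnn::NMSBoxes` removes the duplicate detections here. The sketch below is a plain greedy non-maximum suppression that shows what `palmMinScoreThresh` (0.7) and `palmMinNMSThresh` (0.4) control. It is not OpenCV's implementation: tie-breaking and the exact threshold comparisons may differ. `Box`, `IoU` and `GreedyNMS` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical axis-aligned box (x, y = top-left corner).
struct Box { float x, y, w, h; };

// Intersection over union of two boxes.
float IoU(const Box& a, const Box& b)
{
    float ix = std::max(0.0f, std::min(a.x + a.w, b.x + b.w) - std::max(a.x, b.x));
    float iy = std::max(0.0f, std::min(a.y + a.h, b.y + b.h) - std::max(a.y, b.y));
    float inter = ix * iy;
    float uni = a.w * a.h + b.w * b.h - inter;
    return uni > 0.0f ? inter / uni : 0.0f;
}

// Greedy NMS: drop low scores, then keep the best remaining box and discard
// any box overlapping an already-kept box by more than nmsThresh.
// Returns kept indices, highest score first.
std::vector<int> GreedyNMS(const std::vector<Box>& boxes, const std::vector<float>& scores,
                           float scoreThresh, float nmsThresh)
{
    assert(boxes.size() == scores.size());
    std::vector<int> order;
    for (int i = 0; i < static_cast<int>(boxes.size()); ++i)
        if (scores[i] >= scoreThresh) order.push_back(i);
    std::sort(order.begin(), order.end(),
              [&](int a, int b) { return scores[a] > scores[b]; });

    std::vector<int> kept;
    for (int idx : order) {
        bool suppressed = false;
        for (int k : kept)
            if (IoU(boxes[idx], boxes[k]) > nmsThresh) { suppressed = true; break; }
        if (!suppressed) kept.push_back(idx);
    }
    return kept;
}
```

Boxes below the score threshold never compete. Among the remaining boxes, a box is dropped if its IoU with an already-kept, higher-scoring box exceeds 0.4.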

Blaze::PalmDetection Blaze::GetPalmDetection(cv::Mat regressor, cv::Mat classificator,

int stride, int anchor_count, int column, int row, int anchor, int offset) {

Blaze::PalmDetection res{}; // value-initialized so score is 0 (not garbage) when the anchor is rejected below

int index = (int(row * 128 / stride) + column) * anchor_count + anchor + offset;

float origin_score = regressor.at<float>(0, index, 0);

float score = sigmoid(origin_score);

if (score < palmMinScoreThresh) return res;

float x = classificator.at<float>(0, index, 0);

float y = classificator.at<float>(0, index, 1);

float w = classificator.at<float>(0, index, 2);

float h = classificator.at<float>(0, index, 3);

x += (column + 0.5) * stride - w / 2;

y += (row + 0.5) * stride - h / 2;

res.ymin = (y) / blazePalmSize;

res.xmin = (x) / blazePalmSize;

res.ymax = (y + h) / blazePalmSize;

res.xmax = (x + w) / blazePalmSize;

//if ((res.ymin < 0) || (res.xmin < 0) || (res.xmax > 1) || (res.ymax > 1)) return res;

res.score = score;

std::vector<cv::Point2d> kpts;

for (int key_id = 0; key_id < palmMinNumKeyPoints; key_id++)

{

float kpt_x = classificator.at<float>(0, index, 4 + key_id * 2);

float kpt_y = classificator.at<float>(0, index, 5 + key_id * 2);

kpt_x += (column + 0.5) * stride;

kpt_x = kpt_x / blazePalmSize;

kpt_y += (row + 0.5) * stride;

kpt_y = kpt_y / blazePalmSize;

//UE_LOG(LogTemp, Log, TEXT("kpt id(%d) : (%f, %f)"), key_id, kpt_x, kpt_y);

res.kp_arr[key_id] = cv::Point2d(kpt_x, kpt_y);

}

return res;

}
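The index formula in `GetPalmDetection` flattens two anchor grids for the 128x128 input:

- a 16x16 grid with 2 anchors per cell (stride 8, indices 0 to 511);
- an 8x8 grid with 6 anchors per cell (stride 16, offset 512, indices 512 to 895).

That gives 896 anchors in total. The sketch below reproduces the index formula and the center decoding in isolation, and the function names are hypothetical. `Logit` is an optional extra. Because the sigmoid is monotonic, comparing the raw score against logit(0.7) ≈ 0.847 gives the same accept/reject decision as comparing `sigmoid(raw)` against 0.7.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper mirroring the index formula in Blaze::GetPalmDetection
// for a 128x128 input: stride-8 grid (16x16, 2 anchors) first, then the
// stride-16 grid (8x8, 6 anchors) starting at offset 512.
int AnchorIndex(int stride, int anchorCount, int column, int row, int anchor, int offset)
{
    assert(anchor >= 0 && anchor < anchorCount);
    int gridWidth = 128 / stride;
    return (row * gridWidth + column) * anchorCount + anchor + offset;
}

// Box/keypoint center = raw regressor offset + center of the anchor cell,
// both in 128-px input units (divide by 128 to normalize, as the original does).
float DecodeCenter(float rawOffset, int cell, int stride)
{
    return rawOffset + (cell + 0.5f) * stride;
}

// Inverse of the sigmoid: the raw logit corresponding to a score threshold p.
float Logit(float p)
{
    return std::log(p / (1.0f - p));
}
```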

float Blaze::sigmoid(float x) {

return 1 / (1 + exp(-x));

}

std::vector<Blaze::PalmDetection> Blaze::DenormalizePalmDetections(std::vector<Blaze::PalmDetection> detections, int width, int height, cv::Scalar pad)

{

std::vector<Blaze::PalmDetection> denormDets;

int scale = 0;

if (width > height)

scale = width;

else

scale = height;

for (auto& det : detections)

{

Blaze::PalmDetection denormDet;

denormDet.ymin = det.ymin * scale - pad[0];

denormDet.xmin = det.xmin * scale - pad[1];

denormDet.ymax = det.ymax * scale - pad[0];

denormDet.xmax = det.xmax * scale - pad[1];

denormDet.score = det.score;

for (int i = 0; i < palmMinNumKeyPoints; i++)

{

cv::Point2d pt_new = cv::Point2d(det.kp_arr[i].x * scale - pad[1], det.kp_arr[i].y * scale - pad[0]);

//UE_LOG(LogTemp, Log, TEXT("denorm kpt id(%d) : (%f, %f)"), i, pt_new.x, pt_new.y);

denormDet.kp_arr[i] = pt_new;

}

denormDets.push_back(denormDet);

}

return denormDets;

}

void Blaze::DrawPalmDetections(cv::Mat& img, std::vector<Blaze::PalmDetection> denormDets)

{

for (auto& denormDet : denormDets)

{

cv::Point2d startPt = cv::Point2d(denormDet.xmin, denormDet.ymin);

cv::Point2d endPt = cv::Point2d(denormDet.xmax, denormDet.ymax);

cv::rectangle(img, cv::Rect(startPt, endPt), cv::Scalar(255, 0, 0), 1);

for (int i = 0; i < palmMinNumKeyPoints; i++)

cv::circle(img, denormDet.kp_arr[i], 5, cv::Scalar(255, 0, 0), -1);

std::string score_str = std::to_string(static_cast<int>(denormDet.score * 100))+ "%";

cv::putText(img, score_str, cv::Point(startPt.x, startPt.y - 20), cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 255), 2);

}

}

void Blaze::DrawDetsInfo(cv::Mat& img, std::vector<Blaze::PalmDetection> filteredDets, std::vector<Blaze::PalmDetection> normDets, std::vector<Blaze::PalmDetection> denormDets)

{

//puttext num of filtered/denorm dets

std::string dets_size_str = "filtered dets : " + std::to_string(filteredDets.size()) + ", norm dets : " + std::to_string(normDets.size()) + ", denorm dets : " + std::to_string(denormDets.size());

cv::putText(img, dets_size_str, cv::Point(30, 30), cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(255, 0, 0), 2);

for (int i = 0; i < filteredDets.size(); i++)

{

auto& det = filteredDets.at(i);

std::ostringstream oss;

oss << "filteredDets : (" << det.xmin << ", " << det.ymin << "),(" <<

det.xmax << ", " << det.ymax << ")";

std::string det_str = oss.str();

cv::putText(img, det_str, cv::Point(30, 50 + 20 * i), cv::FONT_HERSHEY_SIMPLEX, 0.4, cv::Scalar(0, 0, 255), 2);

}

}

std::vector<Blaze::PalmDetection> Blaze::FilteringDets(std::vector<Blaze::PalmDetection> detections, int width, int height)

{

std::vector<Blaze::PalmDetection> filteredDets;

for (auto& denormDet : detections)

{

cv::Point2d startPt = cv::Point2d(denormDet.xmin, denormDet.ymin);

if (startPt.x < 10 || startPt.y < 10)

continue;

if (startPt.x > width || startPt.y > height)

continue;

int w = denormDet.xmax - denormDet.xmin;

int h = denormDet.ymax - denormDet.ymin;

// reject palms smaller than 40x40 px or larger than 70% x 70% of the frame

if ((w * h < 40 * 40) || (w * h > (width * 0.7) * (height * 0.7)))

continue;

filteredDets.push_back(denormDet);

}

return filteredDets;

}

std::vector<cv::Rect> Blaze::convertHandRects(std::vector<PalmDetection> filteredDets)

{

std::vector<cv::Rect> handRects;

for (auto& det : filteredDets)

{

int width = det.xmax - det.xmin;

int height = det.ymax - det.ymin;

int large_length = 0;

int center_x = static_cast<int>((det.xmax + det.xmin) / 2);

int center_y = static_cast<int>((det.ymax + det.ymin) / 2);

// scale the longer side of the palm box

if (width > height)

large_length = width * blazeHandDScale;

else

large_length = height * blazeHandDScale;

int start_x = center_x - static_cast<int>(large_length / 2);

int start_y = center_y - static_cast<int>(large_length / 2) - large_length * 0.1;

int end_x = center_x + static_cast<int>(large_length / 2);

int end_y = center_y + static_cast<int>(large_length / 2) - large_length * 0.1;

start_x = std::max(start_x, 0);

start_y = std::max(start_y, 0);

end_x = std::min(end_x, webcamWidth);

end_y = std::min(end_y, webcamHeight);

cv::Point2d startPt = cv::Point2d(start_x, start_y);

cv::Point2d endPt = cv::Point2d(end_x, end_y);

cv::Rect handRect(startPt, endPt);

handRects.push_back(handRect);

}

return handRects;

}
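`convertHandRects` turns each palm box into a square crop for BlazeHand. The standalone sketch below reproduces that geometry in three steps:

- multiply the longer side of the palm box (as the name `large_length` suggests) by `blazeHandDScale`;
- shift the square up by 10% of its side;
- clamp it to the 640x480 frame.

`PixelRect` and `HandCropFromPalm` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical rectangle given by its corners, in pixels.
struct PixelRect { int x0, y0, x1, y1; };

// Sketch of the crop logic in Blaze::convertHandRects: a square of
// (longer palm side * dscale) centered on the palm box, shifted up by 10%
// of its side, then clamped to the frame.
PixelRect HandCropFromPalm(float xmin, float ymin, float xmax, float ymax,
                           float dscale, int frameW, int frameH)
{
    assert(xmax > xmin && ymax > ymin);
    int width = static_cast<int>(xmax - xmin);
    int height = static_cast<int>(ymax - ymin);
    int side = static_cast<int>(std::max(width, height) * dscale);
    int cx = static_cast<int>((xmax + xmin) / 2);
    int cy = static_cast<int>((ymax + ymin) / 2);
    int shift = static_cast<int>(side * 0.1);  // move the square up (smaller y)

    PixelRect r;
    r.x0 = std::max(cx - side / 2, 0);
    r.y0 = std::max(cy - side / 2 - shift, 0);
    r.x1 = std::min(cx + side / 2, frameW);
    r.y1 = std::min(cy + side / 2 - shift, frameH);
    return r;
}
```

Take a 40x60 palm box at (100, 100)-(140, 160) with a scale of 3. The square side is 180, the center is (120, 130), and the crop becomes (30, 22)-(210, 202). Clamping at the frame border makes the crop non-square, so the later resize to 256x256 in `GetHandImages` stretches it slightly.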

void Blaze::DrawRects(cv::Mat& img, std::vector<cv::Rect> rects)

{

for (auto& rect : rects)

{

cv::rectangle(img, rect, cv::Scalar(255, 255, 255), 1);

}

}

void Blaze::GetHandImages(cv::Mat& img, std::vector<cv::Rect> rects, std::vector<cv::Mat>& handImgs)

{

for (auto& rect : rects)

{

//std::cout << "img size : " << img.size() << ", rect : " << rect << std::endl;

//cv::Mat roi(cv::Size(rect.width, rect.height), img.type(), cv::Scalar(0, 0, 0))

cv::Mat roi = img(rect).clone();

cv::cvtColor(roi, roi, cv::COLOR_BGR2RGB);

cv::resize(roi, roi, cv::Size(blazeHandSize, blazeHandSize));

handImgs.push_back(roi);

}

}

std::vector<cv::Mat> Blaze::PredictHandDetections(std::vector<cv::Mat>& imgs)

{

std::vector<cv::Mat> imgs_landmarks;

for (auto& img : imgs)

{

cv::Mat tensor;

img.convertTo(tensor, CV_32F);

tensor = tensor / 255.0; // scale 8-bit pixel values to [0, 1] (255 is the max pixel value, unrelated to the 256 input size)

cv::Mat blob = cv::dnn::blobFromImage(tensor, 1.0, tensor.size(), 0, false, false, CV_32F);

std::vector<cv::String> outNames(3);

outNames[0] = "hand_flag";

outNames[1] = "handedness";

outNames[2] = "landmarks";

blazeHand.setInput(blob);

std::vector<cv::Mat> outputs;

blazeHand.forward(outputs, outNames);

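// Only the landmarks are used below; hand_flag and handedness are read but not used

// (left/right is decided later by IsLeftHand()).

cv::Mat handflag = outputs[0];

cv::Mat handedness = outputs[1];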

cv::Mat landmarks = outputs[2];

imgs_landmarks.push_back(landmarks);

}

return imgs_landmarks;

}

std::vector<cv::Mat> Blaze::DenormalizeHandLandmarks(std::vector<cv::Mat> imgs_landmarks, std::vector<cv::Rect> rects)

{

std::vector<cv::Mat> denorm_imgs_landmarks;

for (int i = 0; i < imgs_landmarks.size(); i++)

{

cv::Mat landmarks = imgs_landmarks.at(i);

cv::Rect rect = rects.at(i);

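// clone(): reshape() only creates a new header over the same data, so without it the writes below would also overwrite the caller's landmark Mats.

cv::Mat squeezed = landmarks.reshape(0, landmarks.size[1]).clone();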

//std::cout << "squeezed size: " << squeezed.size[0] << "," << squeezed.size[1] << std::endl;

for (int j = 0; j < squeezed.size[0]; j++)

{

squeezed.at<float>(j, 0) = squeezed.at<float>(j, 0) * rect.width + rect.x;

squeezed.at<float>(j, 1) = squeezed.at<float>(j, 1) * rect.height + rect.y;

squeezed.at<float>(j, 2) = squeezed.at<float>(j, 2) * rect.height * -1;

}

denorm_imgs_landmarks.push_back(squeezed);

}

//std::cout << std::endl;

return denorm_imgs_landmarks;

}
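For each landmark, `DenormalizeHandLandmarks` only rescales the crop's normalized coordinates to webcam pixels. The minimal sketch below uses plain structs in place of `cv::Rect` and the landmark row. `CropRect`, `Point3` and `CropToImage` are hypothetical names. The function returns a new value instead of writing into its input, which sidesteps the fact that `cv::Mat::reshape` shares data with the original Mat.

```cpp
#include <cassert>

// Hypothetical stand-in for cv::Rect: top-left corner plus size, in pixels.
struct CropRect { int x, y, width, height; };

// One landmark row (x, y, z).
struct Point3 { float x, y, z; };

// Mirrors the per-landmark math in Blaze::DenormalizeHandLandmarks:
// normalized crop coordinates -> webcam pixel coordinates.
Point3 CropToImage(const Point3& n, const CropRect& rect)
{
    assert(rect.width > 0 && rect.height > 0);
    return {
        n.x * rect.width + rect.x,
        n.y * rect.height + rect.y,
        n.z * rect.height * -1.0f,  // depth scaled by the crop height, sign flipped
    };
}
```

For example, take a crop at (100, 50) of size 200x200. The normalized point (0.5, 0.25, 0.5) lands at pixel (200, 100) with depth -100.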

void Blaze::DrawHandDetections(cv::Mat img, std::vector<cv::Mat> denorm_imgs_landmarks)

{

for (auto& denorm_landmarks : denorm_imgs_landmarks)

{

for (int i = 0; i < denorm_landmarks.size[0]; i++)

{

float x = denorm_landmarks.at<float>(i, 0);

float y = denorm_landmarks.at<float>(i, 1);

cv::Point pt(x, y);

cv::circle(img, pt, 5, cv::Scalar(0, 255, 0), -1);

}

for (auto& connection : HAND_CONNECTIONS)

{

int startPtIdx = connection[0];

int endPtIdx = connection[1];

float startPtX = denorm_landmarks.at<float>(startPtIdx, 0);

float startPtY = denorm_landmarks.at<float>(startPtIdx, 1);

float endPtX = denorm_landmarks.at<float>(endPtIdx, 0);

float endPtY = denorm_landmarks.at<float>(endPtIdx, 1);

cv::line(img, cv::Point(startPtX, startPtY), cv::Point(endPtX, endPtY), cv::Scalar(255, 255, 255), 3);

}

}

}

std::vector<cv::Mat> Blaze::DenormalizeHandLandmarksForBoneLocation(std::vector<cv::Mat> imgs_landmarks, std::vector<cv::Rect> rects)

{

std::vector<cv::Mat> denorm_imgs_landmarks;

for (int i = 0; i < imgs_landmarks.size(); i++)

{

/*

denorm lms for img drawing

*/

cv::Mat landmarks = imgs_landmarks.at(i);

cv::Rect rect = rects.at(i);

cv::Mat squeezed_for_origin = landmarks.reshape(0, landmarks.size[1]).clone();

cv::Mat squeezed_for_img = landmarks.reshape(0, landmarks.size[1]).clone();

//std::cout << "squeezed size: " << squeezed.size[0] << "," << squeezed.size[1] << std::endl;

for (int j = 0; j < squeezed_for_img.size[0]; j++)

{

squeezed_for_img.at<float>(j, 0) = squeezed_for_img.at<float>(j, 0) * rect.width + rect.x;

squeezed_for_img.at<float>(j, 1) = squeezed_for_img.at<float>(j, 1) * rect.height + rect.y;

squeezed_for_img.at<float>(j, 2) = squeezed_for_img.at<float>(j, 2) * rect.height * -1;

}

denorm_imgs_landmarks.push_back(squeezed_for_img);

/*

denorm lms for bone location

*/

cv::Mat squeezed_for_bone = landmarks.reshape(0, landmarks.size[1]).clone();

bool isLeft = IsLeftHand(squeezed_for_bone);

//UE_LOG(LogTemp, Log, TEXT("isLeft : %d"), isLeft);

//UE_LOG(LogTemp, Log, TEXT("squeezed_for_bone 4 xy : %f, %f"), squeezed_for_bone.at<float>(4, 0), squeezed_for_bone.at<float>(4, 1));

//UE_LOG(LogTemp, Log, TEXT("squeezed_for_bone 20 xy : %f, %f"), squeezed_for_bone.at<float>(20, 0), squeezed_for_bone.at<float>(20, 1));

if (isLeft)

{

for (int j = 0; j < squeezed_for_bone.size[0]; j++)

{

squeezed_for_bone.at<float>(j, 0) = (squeezed_for_origin.at<float>(j, 1) - squeezed_for_origin.at<float>(0, 1)) * skeletonXRatio * 2 * -1;

squeezed_for_bone.at<float>(j, 1) = (squeezed_for_origin.at<float>(j, 0) - 0.5) * skeletonYRatio * 2;

squeezed_for_bone.at<float>(j, 2) = squeezed_for_origin.at<float>(j, 2) * skeletonYRatio * 3 * -1;

//UE_LOG(LogTemp, Log, TEXT("squeezed_for_bone %d : %f, %f, %f"), j, squeezed_for_bone.at<float>(j, 0), squeezed_for_bone.at<float>(j, 1), squeezed_for_bone.at<float>(j, 2));

}

handLeft = squeezed_for_bone.clone();

handLeftImg = squeezed_for_img.clone();

}

else

{

for (int j = 0; j < squeezed_for_bone.size[0]; j++)

{

squeezed_for_bone.at<float>(j, 0) = (squeezed_for_origin.at<float>(j, 1) - squeezed_for_origin.at<float>(0, 1)) * skeletonXRatio * 2;

squeezed_for_bone.at<float>(j, 1) = (squeezed_for_origin.at<float>(j, 0) - 0.5) * skeletonYRatio * 2;

squeezed_for_bone.at<float>(j, 2) = squeezed_for_origin.at<float>(j, 2) * skeletonYRatio * 3;

//UE_LOG(LogTemp, Log, TEXT("squeezed_for_bone %d : %f, %f, %f"), j, squeezed_for_bone.at<float>(j, 0), squeezed_for_bone.at<float>(j, 1), squeezed_for_bone.at<float>(j, 2));

}

handRight = squeezed_for_bone.clone();

handRightImg = squeezed_for_img.clone();

}

//UE_LOG(LogTemp, Log, TEXT("handLeft rows : %d, cols : %d"), handLeft.size[0], handLeft.size[1]);

}

//std::cout << std::endl;

return denorm_imgs_landmarks;

}
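The bone-location branch swaps axes:

- the image y offset from the wrist becomes bone x;
- the image x offset from the crop center becomes bone y;
- depth becomes bone z.

For the left hand, the signs of x and z are flipped. The sketch below mirrors that arithmetic without OpenCV. `Landmark` and `ToBoneSpace` are hypothetical names. The defaults 16.5 and 11.6 are `skeletonXRatio` and `skeletonYRatio`. The extra factors 2 and 3 are copied as-is from the C++ code above; the Python version from the previous post did not have them.

```cpp
#include <cassert>
#include <vector>

// Normalized BlazeHand landmark in crop space.
struct Landmark { float x, y, z; };

// Mirrors the bone-location branch of
// Blaze::DenormalizeHandLandmarksForBoneLocation. The factors 2 and 3 and
// the left-hand sign flip are copied from that code, not derived.
std::vector<Landmark> ToBoneSpace(const std::vector<Landmark>& lms, bool isLeft,
                                  float xRatio = 16.5f, float yRatio = 11.6f)
{
    assert(!lms.empty());  // landmark 0 (wrist) is the origin for bone x
    const float sign = isLeft ? -1.0f : 1.0f;
    std::vector<Landmark> out;
    out.reserve(lms.size());
    for (const Landmark& p : lms) {
        out.push_back({
            (p.y - lms[0].y) * xRatio * 2 * sign,  // image y (from wrist) -> bone x
            (p.x - 0.5f) * yRatio * 2,             // image x (from crop center) -> bone y
            p.z * yRatio * 3 * sign,               // depth -> bone z
        });
    }
    return out;
}
```

With the wrist at y = 0.8, a landmark at (0.6, 0.3, 0.01) maps to roughly (16.5, 2.32, -0.348) for a left hand and (-16.5, 2.32, 0.348) for a right hand.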

bool Blaze::IsLeftHand(cv::Mat normalizedLandmarks)

{

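// Heuristic: thumb tip (landmark 4) left of pinky tip (landmark 20) in x means "left hand".

// It assumes a roughly upright hand; rotating the hand in the image can flip the result.

return normalizedLandmarks.at<float>(4, 0) < normalizedLandmarks.at<float>(20, 0);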

}
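`IsLeftHand` compares fingertip x positions, so it depends on the hand being roughly upright. Turning the same hand upside down in the image moves the thumb tip to the other side and flips the answer. An alternative, not the method used in this post, is the sign of the 2D cross product between wrist→index knuckle (landmark 5) and wrist→pinky knuckle (landmark 17). That sign does not change under in-plane rotation, and it does not use the fingertips at all. The sketch below puts both rules side by side. `Lm2`, `IsLeftByTips` and `IsLeftByPalmOrientation` are hypothetical names. The sign convention was chosen so the two rules agree for an upright, open hand.

```cpp
#include <cassert>
#include <vector>

// Normalized image-space landmark (z ignored).
struct Lm2 { float x, y; };

// The post's rule: thumb tip (4) left of pinky tip (20) in image x -> "left".
bool IsLeftByTips(const std::vector<Lm2>& lms)
{
    assert(lms.size() >= 21);
    return lms[4].x < lms[20].x;
}

// Alternative heuristic (not from the post): sign of the 2D cross product of
// wrist->index knuckle (5) and wrist->pinky knuckle (17). Rotating the hand
// within the image plane does not change the sign. Showing the back of the
// hand instead of the palm still flips it, just like the tip rule.
bool IsLeftByPalmOrientation(const std::vector<Lm2>& lms)
{
    assert(lms.size() >= 21);
    float ax = lms[5].x - lms[0].x, ay = lms[5].y - lms[0].y;
    float bx = lms[17].x - lms[0].x, by = lms[17].y - lms[0].y;
    return ax * by - ay * bx > 0.0f;
}
```

Rotating an upright test hand by 180 degrees flips `IsLeftByTips` but leaves `IsLeftByPalmOrientation` unchanged. The network's `handedness` output, which `PredictHandDetections` reads but does not use, might also be worth comparing against either rule.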
